Reimagining Post-Visit Care with an AI Copilot that Learns and Adapts

We built a decision-support copilot that complements clinical expertise with explainable, adaptive AI, designed to act as a trusted colleague at every step of care.

Intelligent Assistance · Electronic Health Records (EHR) · Agentic AI · AI Copilot · Decision Support Assistant

/ BACKGROUND

Healthcare doesn't need more clicks; it needs smarter decisions, made faster.

Clinicians orchestrate follow-up care after every patient visit. This should take 3 minutes. It takes 14. In that gap, 40% of necessary follow-ups slip through the cracks, preventable readmissions spike, and clinician burnout deepens.

The problem isn't a lack of information. It's that information lives in silos, decisions go unmade, and actions get dropped.

/ THE INSIGHT: FROM FEATURES TO DECISION ECOSYSTEMS

What We Discovered

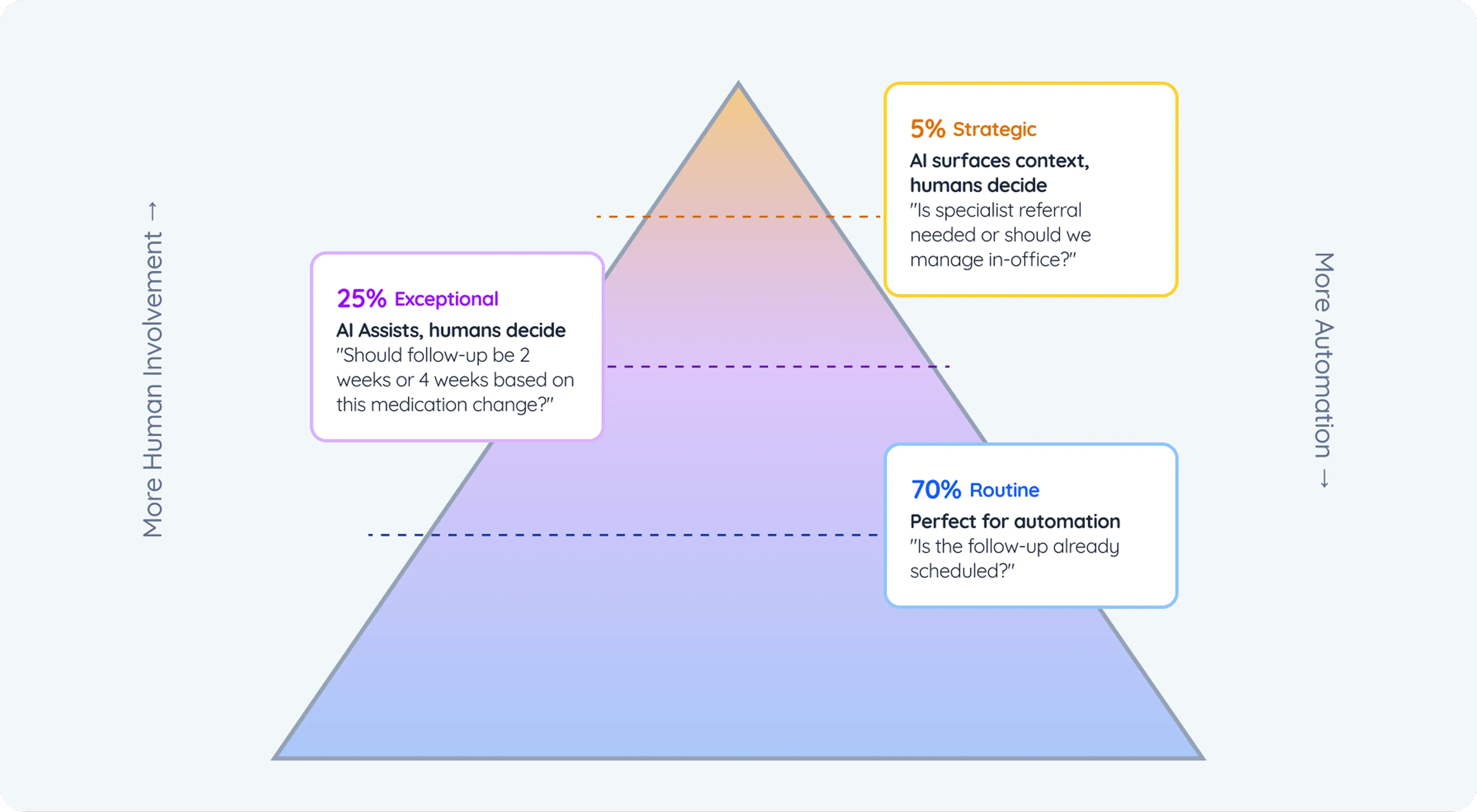

When we mapped post-visit follow-up workflows, we found three types of opportunities for AI intervention.

The Core Problem

Current systems force clinicians to manually handle all three decision types. They navigate 6+ systems, apply mental checklists, and often skip the tedious gap-detection step entirely.

Result: care quality depends on how efficient a clinician happens to be on a given day, not on actual clinical need.

/ APPROACH

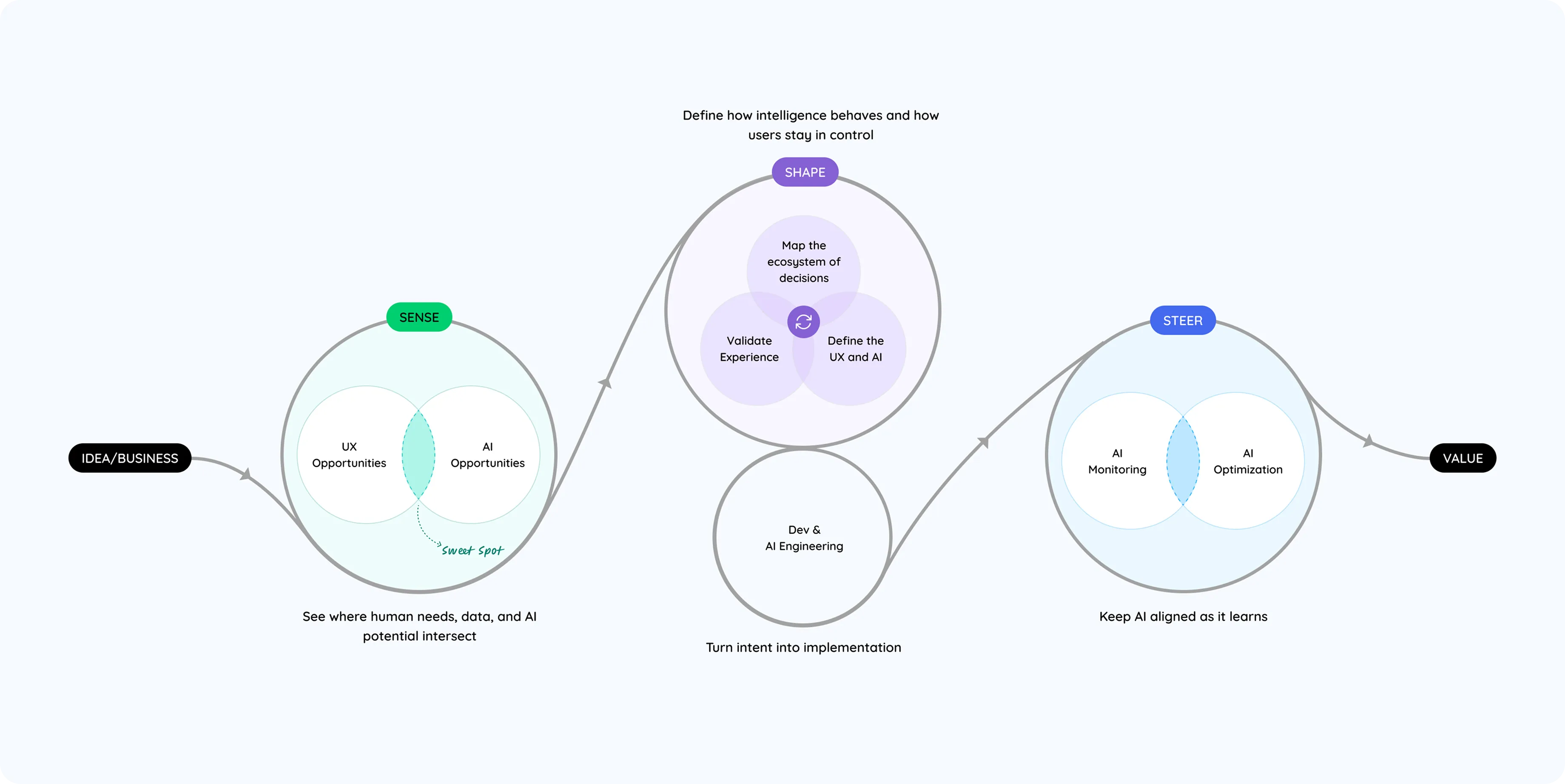

Sense → Shape → Steer: Designing AI as a Trusted Colleague

We followed our Sense–Shape–Steer methodology, a three-phase, AI-first design approach that ensures AI complements human expertise rather than replacing it. The goal: to build a system that behaves like a thoughtful colleague, not an autopilot.

/ PHASE 1 - SENSE

Understanding Decision Boundaries & Data Realities

Mapping the Ecosystem

We began by observing real workflows and interviewing clinicians to uncover how decisions are actually made.

Assessing the Data Landscape

AI is only as smart as the data it learns from. We audited data sources across EHRs, lab systems, and protocols, assessing each for quality, accessibility, and trustworthiness.

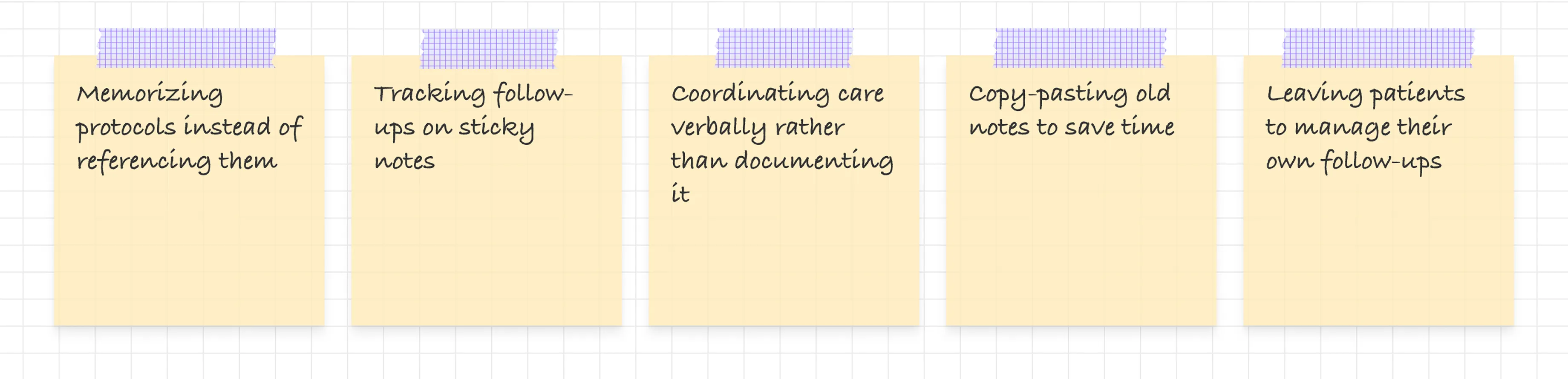

Clinician Workarounds

To cope, clinicians had built their own micro-systems.

Where AI Actually Fits

We mapped potential AI interventions and prioritized three for the MVP, balancing clinical value against technical feasibility:

1. Protocol Matching

→ Recognize diagnoses and surface relevant care protocols

Example: "Type 2 Diabetes → A1C check due 3 months after medication start."

2. Gap Detection

→ Compare what should be scheduled vs. what is scheduled

Example: "Labs ordered but no appointment scheduled — gap detected."

3. Autonomous Task Creation

→ Generate structured tasks for the right team members

Example: "Nurse: finalize lab order. Admin: call patient to confirm."
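To make the hand-offs between these three interventions concrete, here is a minimal sketch of how they could chain together. It is illustrative only, not the production system: the protocol table, the `ProtocolStep` and `Task` types, and the function names are hypothetical; "3 months" is approximated as 90 days; and gaps are detected by simple name matching against what is already scheduled.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Set, Tuple

@dataclass(frozen=True)
class ProtocolStep:
    follow_up: str       # e.g. "A1C check"
    due_after_days: int  # offset from the triggering event (e.g. medication start)
    owner: str           # team role that owns the clinical step

# Hypothetical protocol table; a real system would load vetted clinical protocols.
PROTOCOLS: Dict[str, List[ProtocolStep]] = {
    "Type 2 Diabetes": [ProtocolStep("A1C check", 90, "Nurse")],
}

@dataclass(frozen=True)
class Task:
    assignee: str
    description: str
    due: date

def match_protocols(diagnoses: List[str]) -> List[ProtocolStep]:
    """Protocol matching: collect the steps for every recognized diagnosis."""
    return [step for dx in diagnoses for step in PROTOCOLS.get(dx, [])]

def detect_gaps(steps: List[ProtocolStep], scheduled: Set[str],
                event_date: date) -> List[Tuple[ProtocolStep, date]]:
    """Gap detection: expected follow-ups that have nothing scheduled yet."""
    return [(s, event_date + timedelta(days=s.due_after_days))
            for s in steps if s.follow_up not in scheduled]

def create_tasks(gaps: List[Tuple[ProtocolStep, date]]) -> List[Task]:
    """Task creation: route each gap to its clinical owner and to admin staff."""
    tasks: List[Task] = []
    for step, due in gaps:
        tasks.append(Task(step.owner, f"Finalize order: {step.follow_up}", due))
        tasks.append(Task("Admin", f"Call patient to schedule {step.follow_up}", due))
    return tasks

steps = match_protocols(["Type 2 Diabetes"])
gaps = detect_gaps(steps, scheduled=set(), event_date=date(2024, 1, 15))
for task in create_tasks(gaps):
    print(task.assignee, "|", task.description, "| due", task.due)
```

Keeping the three steps as separate functions mirrors the MVP split: each one can be measured and tuned on its own before its output feeds the next.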

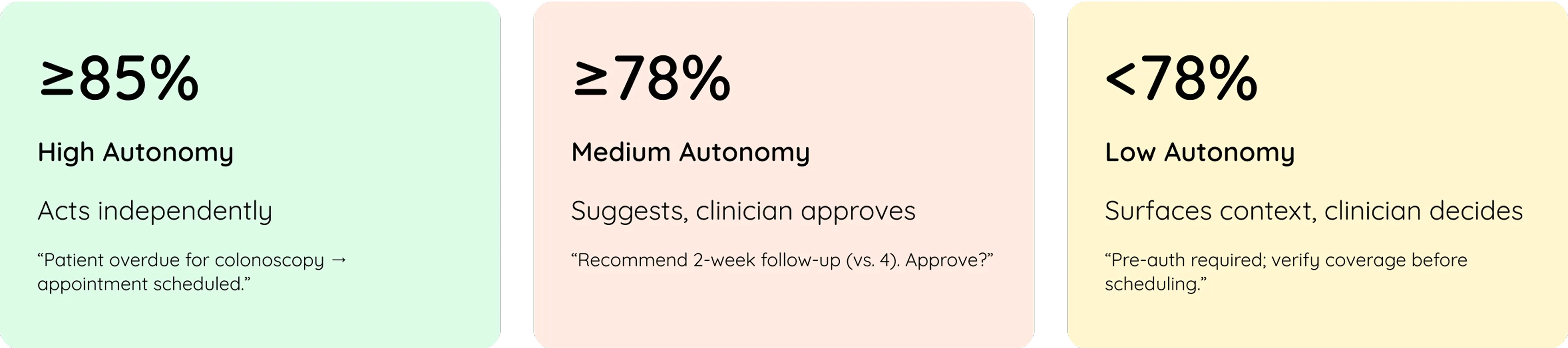

Defining Autonomy Boundaries

We codified when the AI should act, suggest, or defer, based on confidence thresholds and decision criticality.

Principle: Automate only what's predictable, suggest what's contextual, and surface what's complex.
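One way to express the act/suggest/defer principle in code is a small decision function over confidence and criticality. This is a hedged sketch: the `Action` enum, the `decide` function, and the 0.90 and 0.70 thresholds are hypothetical placeholders, not validated clinical values. Critical decisions are never automated here; at best they become suggestions.

```python
from enum import Enum

class Action(Enum):
    ACT = "act"          # predictable and low-stakes: automate
    SUGGEST = "suggest"  # contextual: a clinician approves before anything happens
    DEFER = "defer"      # complex or uncertain: surface for manual review

# Hypothetical thresholds; real values would be tuned and clinically validated.
ACT_THRESHOLD = 0.90
SUGGEST_THRESHOLD = 0.70

def decide(confidence: float, critical: bool) -> Action:
    """Map a confidence score and decision criticality to an autonomy level."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence < SUGGEST_THRESHOLD:
        return Action.DEFER    # e.g. "Low confidence (62%), please review manually"
    if critical or confidence < ACT_THRESHOLD:
        return Action.SUGGEST  # critical decisions always keep a human in the loop
    return Action.ACT

print(decide(0.95, critical=False))  # routine and confident
print(decide(0.84, critical=False))  # confident enough to suggest, not to act
print(decide(0.62, critical=False))  # below the suggestion threshold
```

Checking the confidence floor first means an uncertain output is deferred regardless of how routine the task looks.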

/ PHASE 2 - SHAPE

Designing AI Behavior & Interaction

Once we knew where AI belonged, we turned our focus to how it should behave: not as a tool, but as a teammate.

Defining the AI's Character

We designed the AI as an Expert Colleague, not a passive assistant.

It:

- Acts decisively on routine tasks

- Suggests respectfully on complex ones

- Explains its reasoning transparently

- Admits uncertainty ("I'm 78% confident")

- Learns from overrides and adapts

Trigger Moments: When AI Steps In

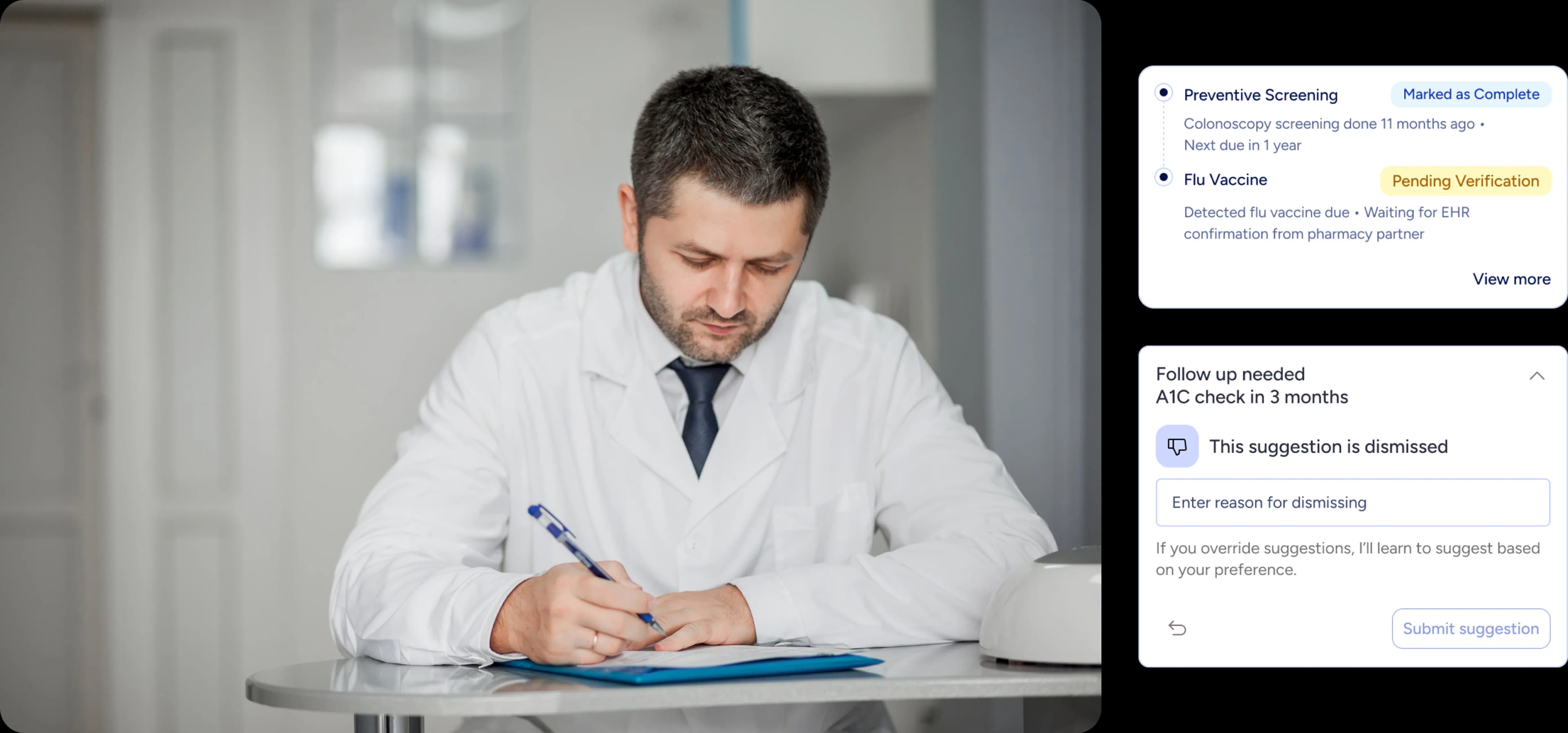

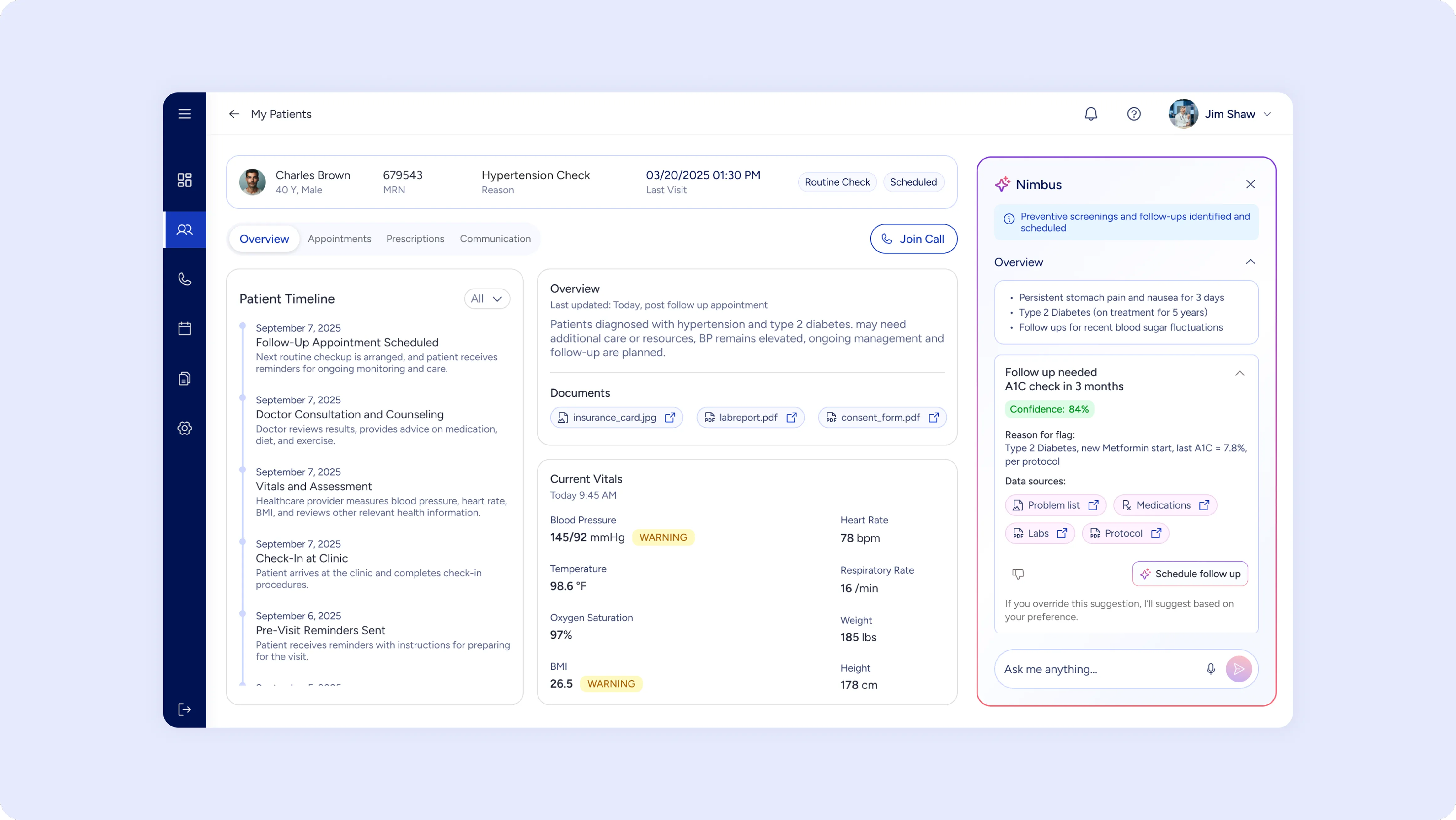

Transparency by Design

Every recommendation includes:

- Confidence level ("84% confident")

- Why this suggestion (diagnosis, data sources, protocol reference)

- Data provenance (EHR sections, lab dates, medication history)
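The three transparency elements map naturally onto a single record that travels with every recommendation. The sketch below is an assumption about how such a record could look; the `Recommendation` and `Source` classes, their field names, and the example values are hypothetical and illustrative only.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Source:
    kind: str    # e.g. "EHR section", "Lab result", "Medication history"
    detail: str  # e.g. a section name or a lab date

@dataclass
class Recommendation:
    action: str
    confidence: float  # 0.0 to 1.0
    rationale: str     # why this suggestion
    protocol_ref: str  # protocol the suggestion is based on
    sources: List[Source] = field(default_factory=list)  # data provenance

    def explain(self) -> str:
        """Render confidence, reasoning, and provenance as plain text."""
        lines = [f"{self.action} ({self.confidence:.0%} confident)",
                 f"Why: {self.rationale} [protocol: {self.protocol_ref}]"]
        lines += [f"  Source: {s.kind} - {s.detail}" for s in self.sources]
        return "\n".join(lines)

rec = Recommendation(
    action="Schedule A1C check",
    confidence=0.84,
    rationale="Type 2 Diabetes diagnosis with a recent medication start",
    protocol_ref="Diabetes follow-up protocol (illustrative)",
    sources=[Source("EHR section", "Problem list"),
             Source("Medication history", "Medication start date")],
)
print(rec.explain())
```

Because provenance is a required part of the record rather than an optional annotation, a recommendation cannot be displayed without its supporting evidence.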

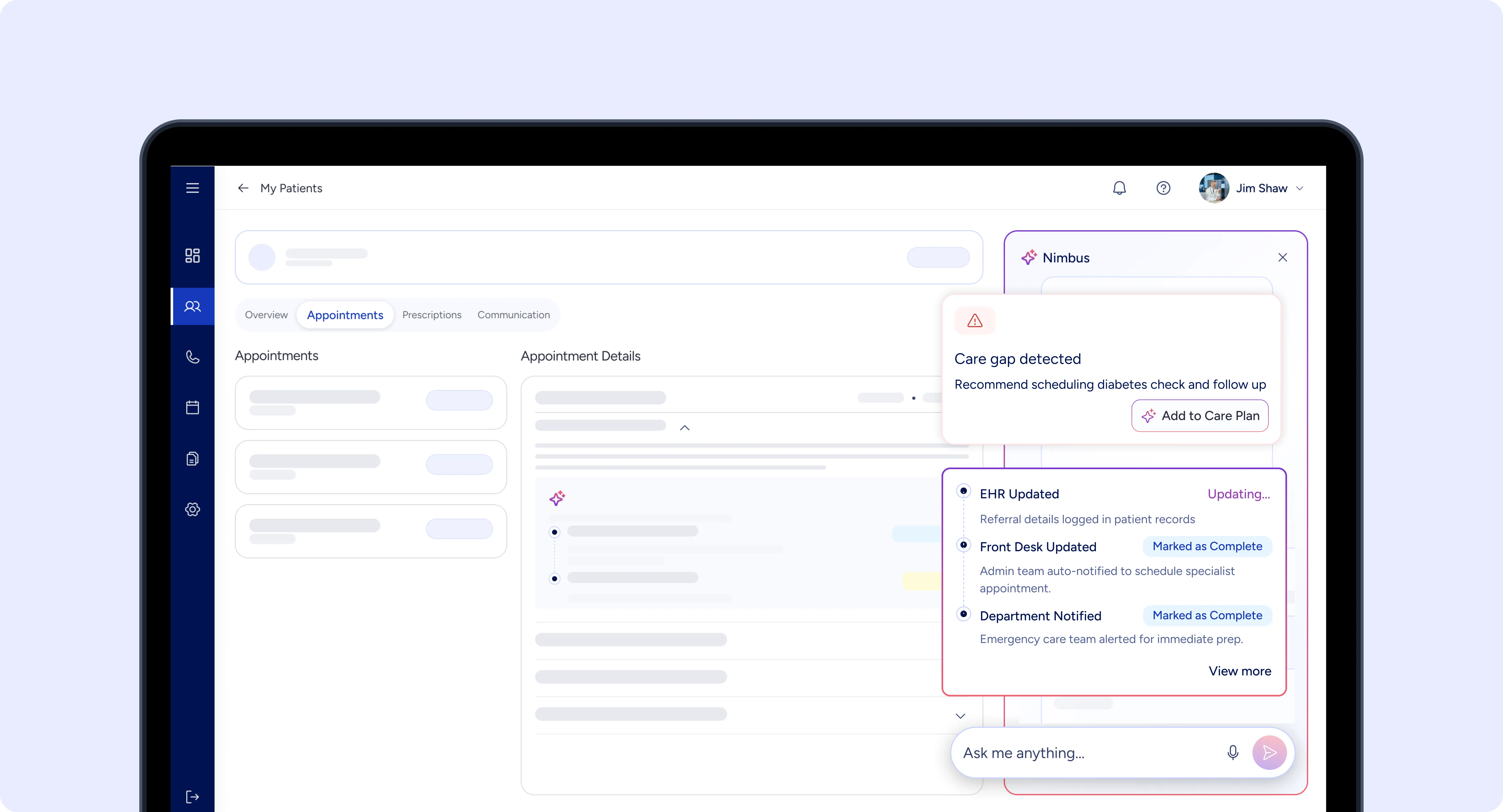

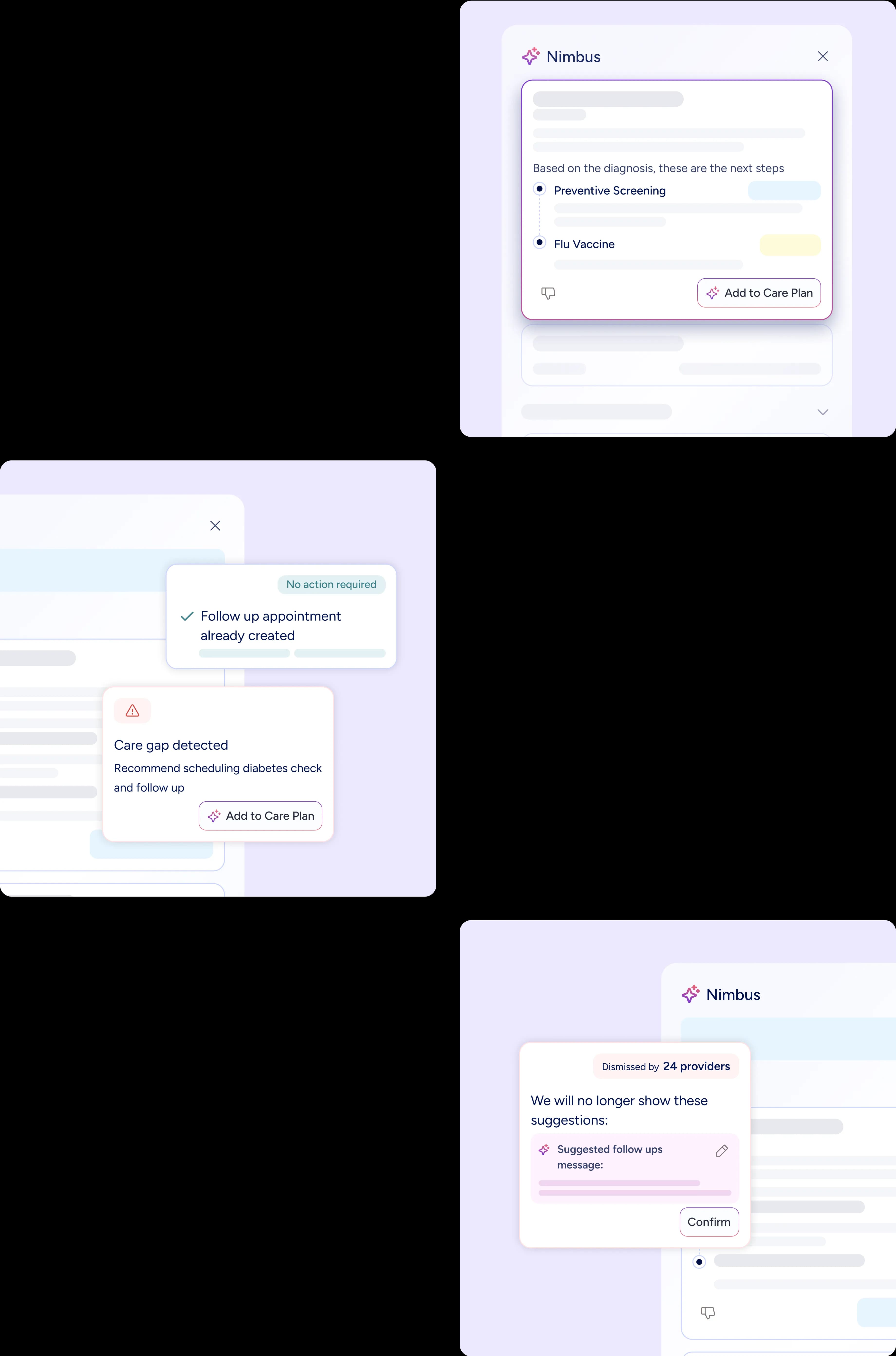

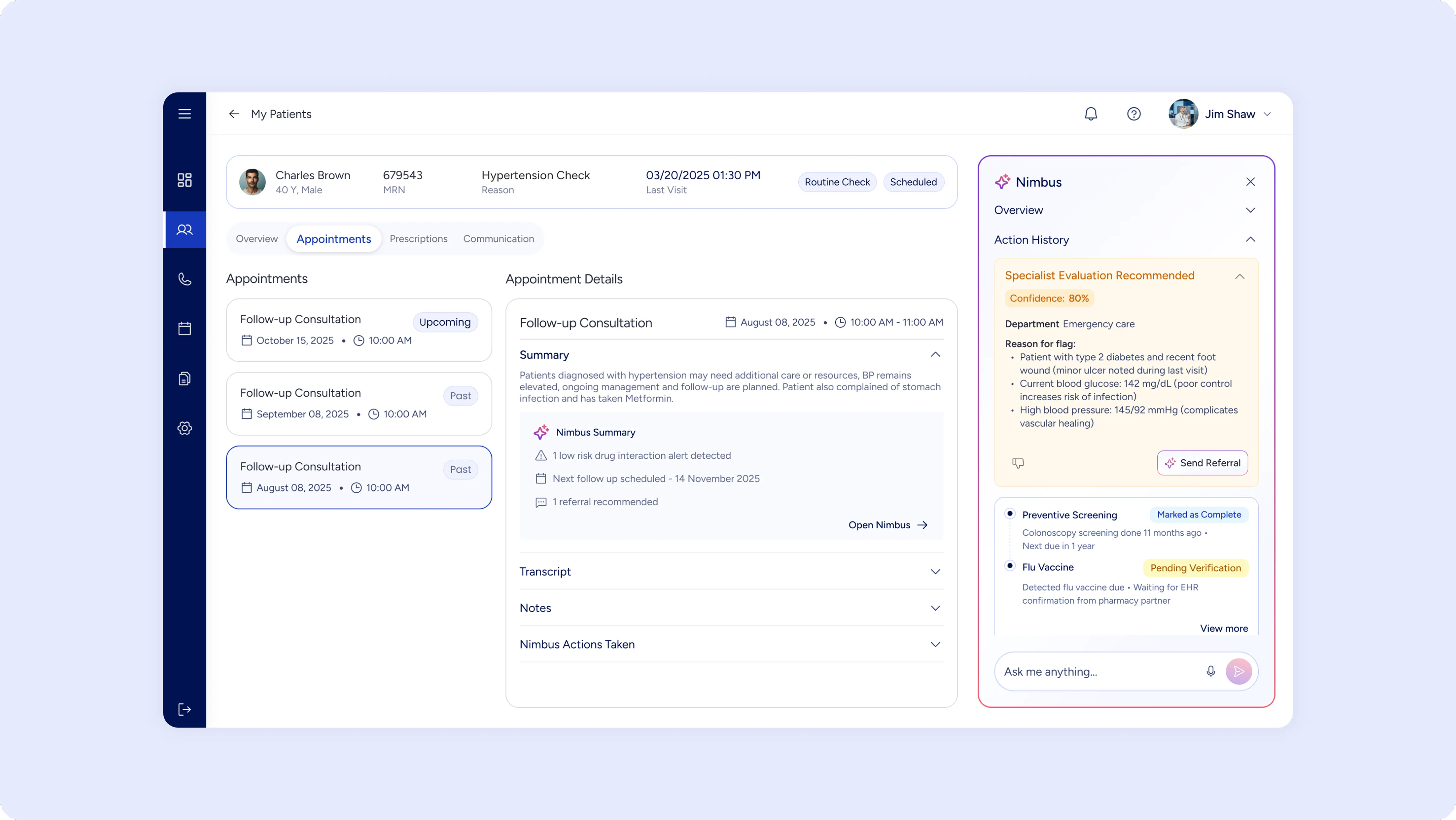

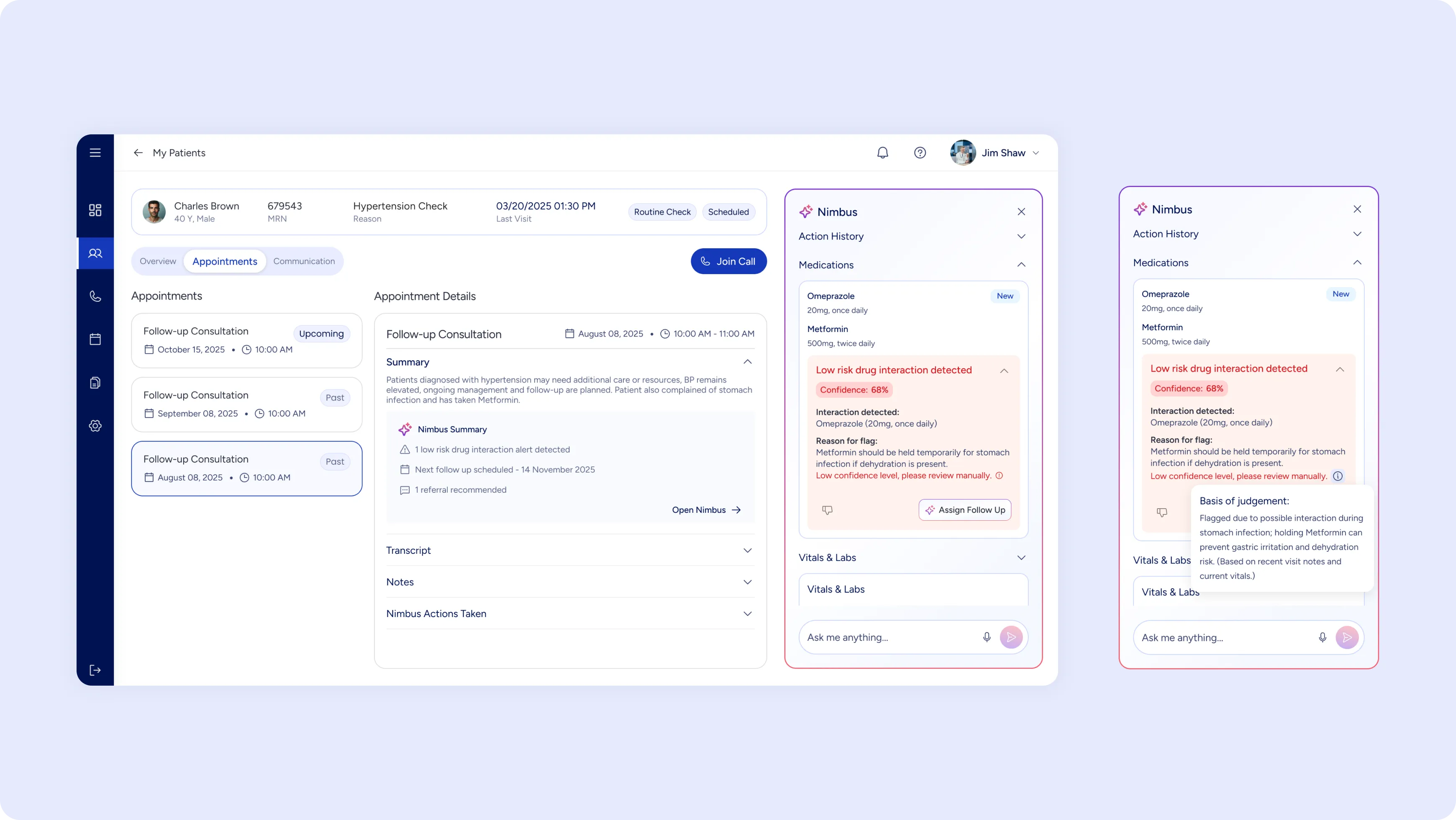

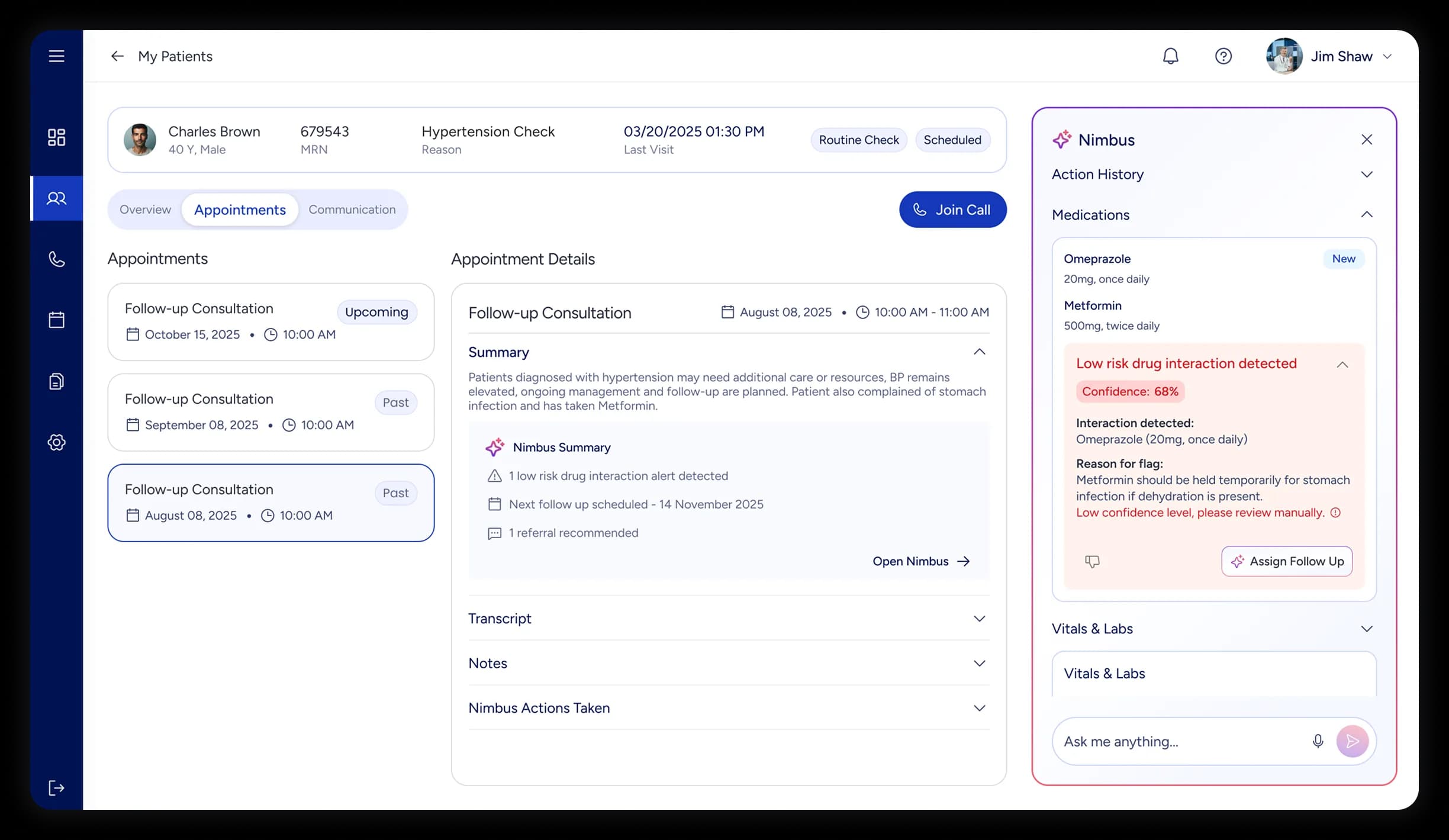

A highlighted follow-up recommendation for the patient, based on their last appointment, with the reasoning and sources that support the high-confidence suggestion.

A lower-confidence recommended action, shown with its reasoning and a call to action (CTA) so staff can act on it immediately once they approve it.

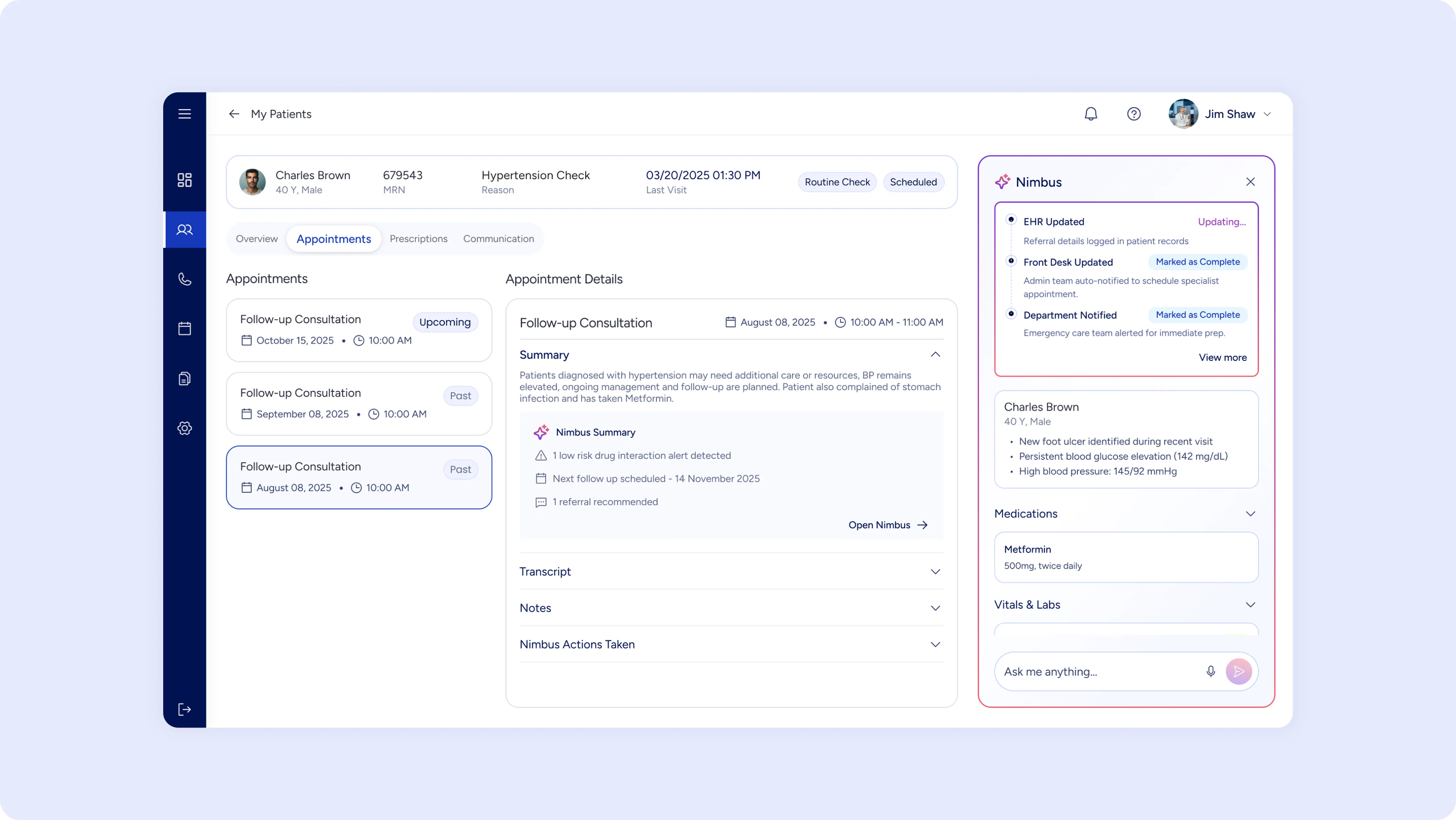

A timeline view of every task the agent completes, with the real-time status of each stage, giving staff an overview of how the agent works.

Control & Customization

When unsure, the AI always defers:

- "Can't verify med list — recommend manual check."

- "Low confidence (62%) — please review manually."

- "Data conflict: Diabetes diagnosis but no recent labs. Clarify first?"

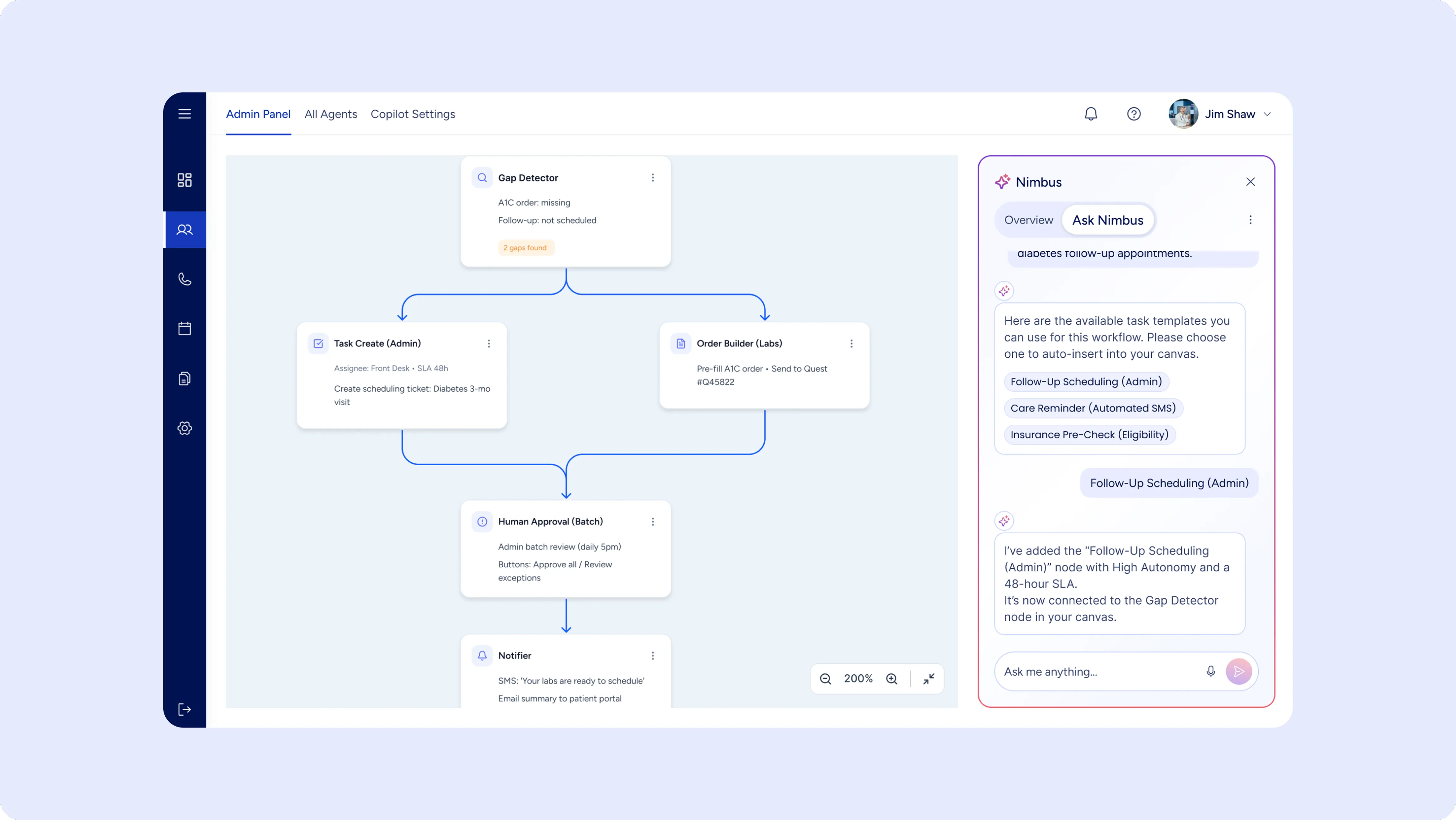

Staff use the copilot to customize their agentic workflows to their practice's requirements.

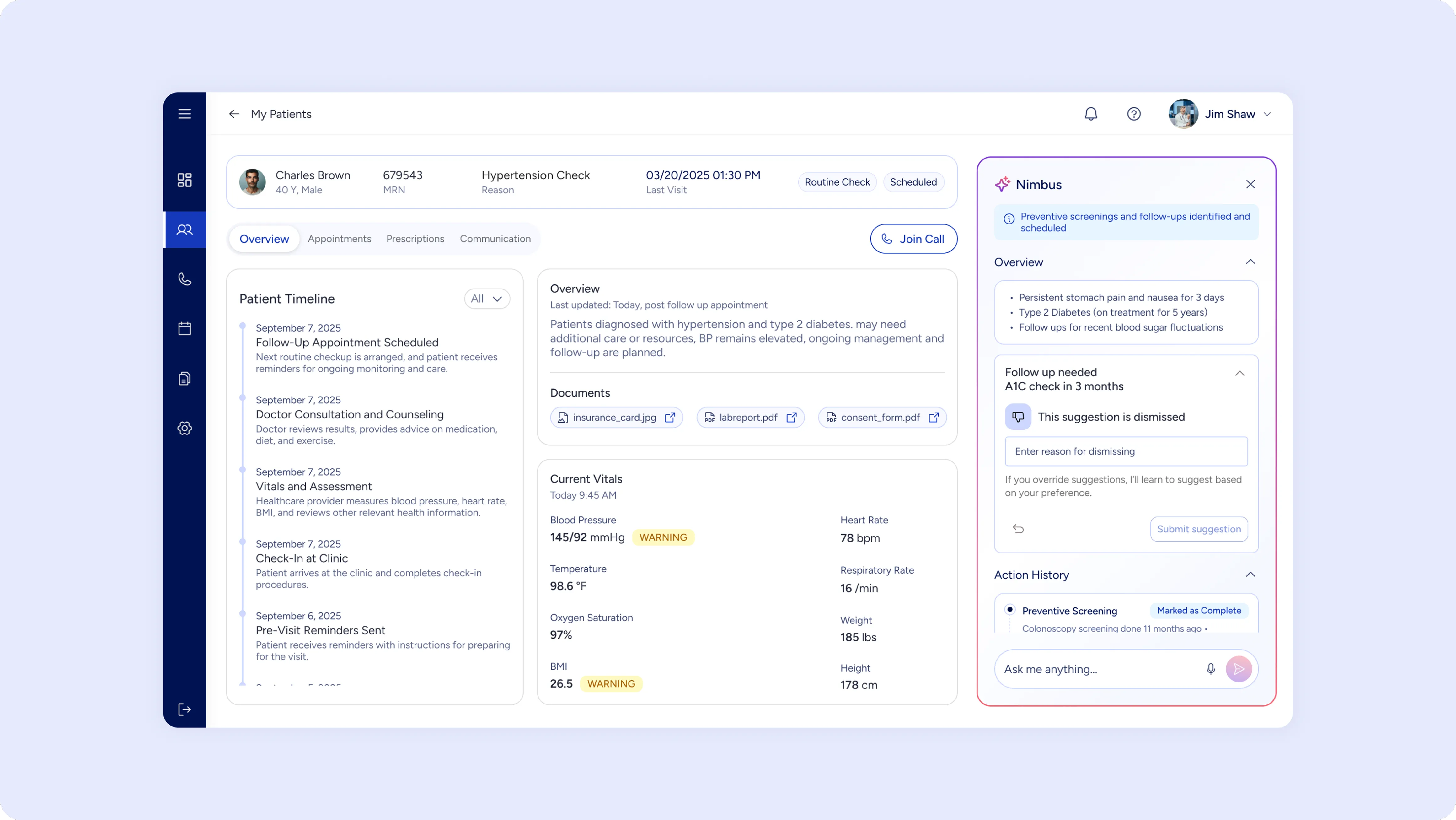

Staff can hide or override any AI suggestion based on their preferences, and each override trains the AI to make better recommendations in the future.

Handling Uncertainty

To keep humans firmly in charge, clinicians can:

1. Reject or edit suggestions ("We don't do this in our practice.")

2. Define practice patterns (never refer to X specialty, always use Y interval).

3. Adjust autonomy levels: conservative → balanced → aggressive.

4. Pause or resume the AI: disable it when overwhelmed or reviewing.
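A minimal sketch of how these clinician controls could be applied to each suggestion before it reaches the screen. Everything here is assumed for illustration: the `PracticeSettings` and `Suggestion` types, the autonomy presets (each is the minimum confidence at which the AI may act on its own), and the example rules are hypothetical, not the product's actual configuration.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional, Set

# Hypothetical presets: minimum confidence before the AI may act on its own.
AUTONOMY_PRESETS: Dict[str, float] = {
    "conservative": 0.97, "balanced": 0.90, "aggressive": 0.80,
}

@dataclass(frozen=True)
class Suggestion:
    kind: str           # "referral" or "follow_up"
    target: str         # specialty or follow-up name
    interval_days: int
    confidence: float

@dataclass
class PracticeSettings:
    never_refer_to: Set[str]           # "never refer to X specialty"
    interval_overrides: Dict[str, int]  # "always use Y interval"
    autonomy: str = "balanced"
    paused: bool = False

def apply_practice(s: Suggestion, cfg: PracticeSettings) -> Optional[Suggestion]:
    """Drop or rewrite a suggestion according to clinician-defined patterns."""
    if cfg.paused:
        return None  # AI paused: surface nothing
    if s.kind == "referral" and s.target in cfg.never_refer_to:
        return None
    if s.target in cfg.interval_overrides:
        s = replace(s, interval_days=cfg.interval_overrides[s.target])
    return s

def may_act_autonomously(s: Suggestion, cfg: PracticeSettings) -> bool:
    """True only if the AI is running and confidence clears the chosen preset."""
    return not cfg.paused and s.confidence >= AUTONOMY_PRESETS[cfg.autonomy]
```

Treating practice patterns as a filter that runs after the model, rather than retraining the model for every preference, lets a clinician's rule take effect immediately and stay easy to inspect.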

Low-confidence suggestions are highlighted, showing how the AI flags a recommendation that requires user intervention.

Every deferred action is flagged with a priority and the specific reason staff confirmation is required.

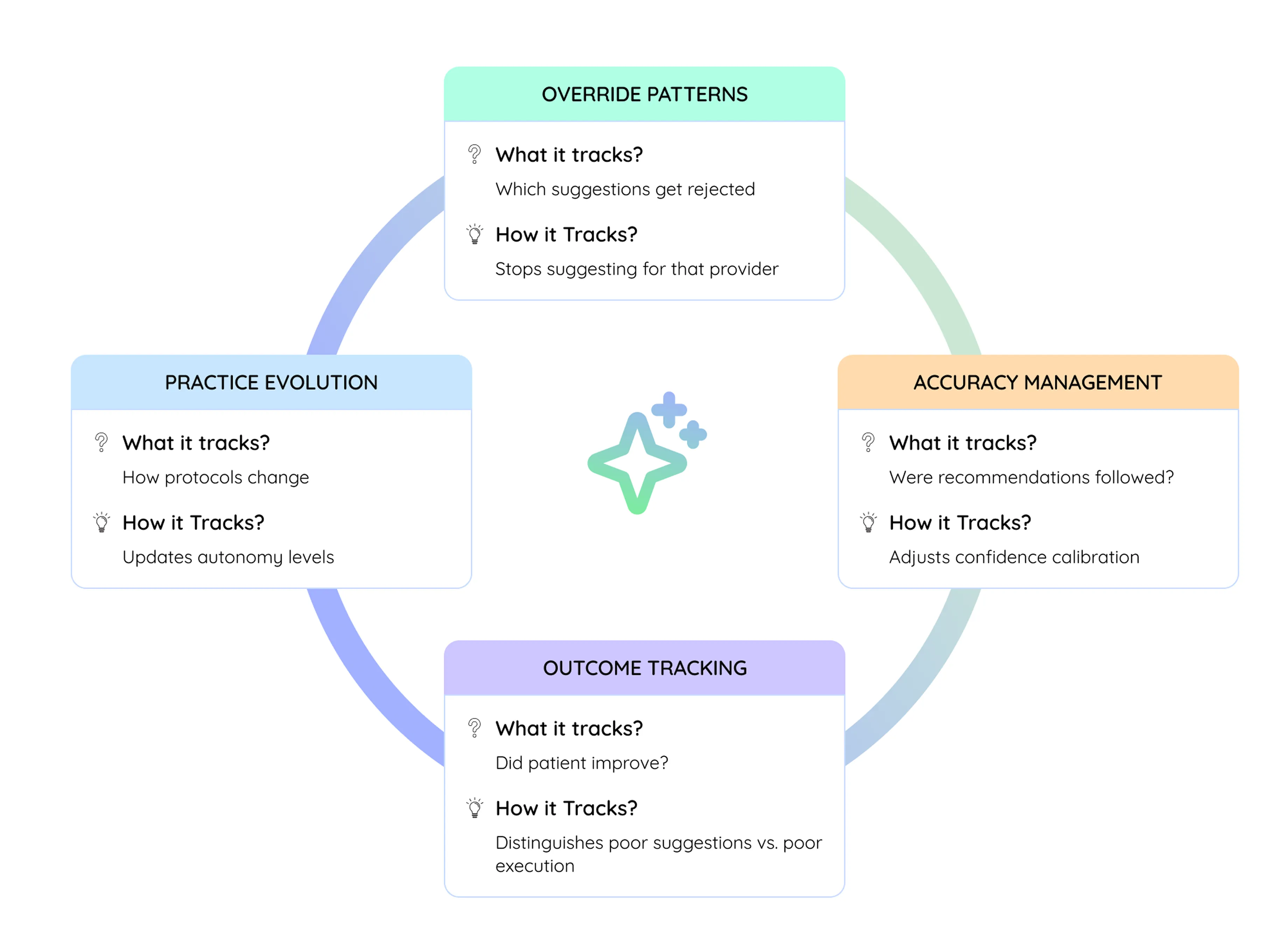

Adaptive Learning

The AI continuously evolves through four feedback loops.

/ PHASE 3 - STEER

Measurement, Governance, and Evolution

Designing trustworthy behavior was only half the task. The real test began when the AI entered live clinical workflows.

From that point, our focus shifted from design to measuring success, governance, and evolution. We needed to ensure the AI could keep learning safely, improving where it was effective and pulling back where its confidence dipped.

Success Metrics (Three Levels)

Level 1: AI Accuracy

- Protocol match accuracy: When the AI identifies a protocol, is it correct?

- Gap detection accuracy: When the AI flags a gap, is it real?

- Confidence calibration: When the AI says it is 90% confident, is it right 90% of the time?
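Confidence calibration can be checked by grouping past suggestions by stated confidence and comparing each group's average confidence with how often it turned out correct. The sketch below uses the standard expected calibration error (ECE) with equal-width bins; the input format of `(confidence, was_correct)` pairs and the function name `calibration_report` are assumptions for illustration.

```python
from typing import List, Tuple

def calibration_report(preds: List[Tuple[float, bool]], n_bins: int = 10):
    """Bin (confidence, was_correct) pairs into equal-width confidence bins.

    Returns a list of (mean confidence, accuracy, count) per non-empty bin,
    plus the expected calibration error (ECE): the count-weighted average of
    |accuracy - mean confidence| across bins.
    """
    if not preds:
        raise ValueError("no predictions to evaluate")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, correct in preds:
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 joins the top bin
        bins[idx].append((conf, correct))
    report, ece = [], 0.0
    for b in bins:
        if not b:
            continue
        mean_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        report.append((mean_conf, accuracy, len(b)))
        ece += len(b) / len(preds) * abs(accuracy - mean_conf)
    return report, ece

# Ten suggestions made at 95% confidence, nine of which proved correct.
report, ece = calibration_report([(0.95, True)] * 9 + [(0.95, False)])
print(report, round(ece, 3))
```

In this example the AI is right 90% of the time while claiming 95%, an ECE of 0.05: slightly overconfident. An ECE near zero means its stated confidence can be taken at face value.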

Level 2: User Behavior

- Acceptance rate: What percentage of suggestions do clinicians accept?

- Override patterns: Which suggestions get rejected? (Signals design gaps)

- Usage frequency: How many times per day does each clinician use it?
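The first two behavior metrics fall out of a simple log of what clinicians did with each suggestion. This sketch assumes a hypothetical event format of `(suggestion_type, outcome)` pairs, and it treats both edits and rejections as overrides, which is an assumption rather than a stated definition.

```python
from collections import Counter
from typing import Iterable, List, Tuple

def behavior_metrics(events: Iterable[Tuple[str, str]]) -> Tuple[float, List[Tuple[str, int]]]:
    """Compute acceptance rate and override counts per suggestion type.

    Each event is (suggestion_type, outcome), where outcome is one of
    "accepted", "edited", or "rejected". Edits count as overrides here.
    """
    events = list(events)
    if not events:
        return 0.0, []
    accepted = sum(1 for _, outcome in events if outcome == "accepted")
    overrides = Counter(kind for kind, outcome in events
                        if outcome in ("edited", "rejected"))
    # most_common() lists the most-overridden suggestion types first.
    return accepted / len(events), overrides.most_common()

rate, overridden = behavior_metrics([
    ("protocol_match", "accepted"), ("protocol_match", "accepted"),
    ("gap_detection", "rejected"), ("gap_detection", "edited"),
    ("task_creation", "rejected"),
])
print(rate, overridden)
```

Sorting override counts by type points the team at the suggestion categories most likely to hide a design gap.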

Level 3: Clinical Outcomes

- Follow-up completion: % of recommendations actually completed

- Care gap closure: How many gaps get addressed?

- Readmission rate: Do preventable readmissions decrease?

- Patient outcomes: Do coordinated patients have better health outcomes?

- Time saved: How much less time is spent on follow-up coordination?

Governance: Alert Escalation Framework

We implemented a five-level governance model to monitor and manage the AI's behavior in production. Each level defined a clear trigger and escalation path, ensuring no suggestion could silently go wrong.

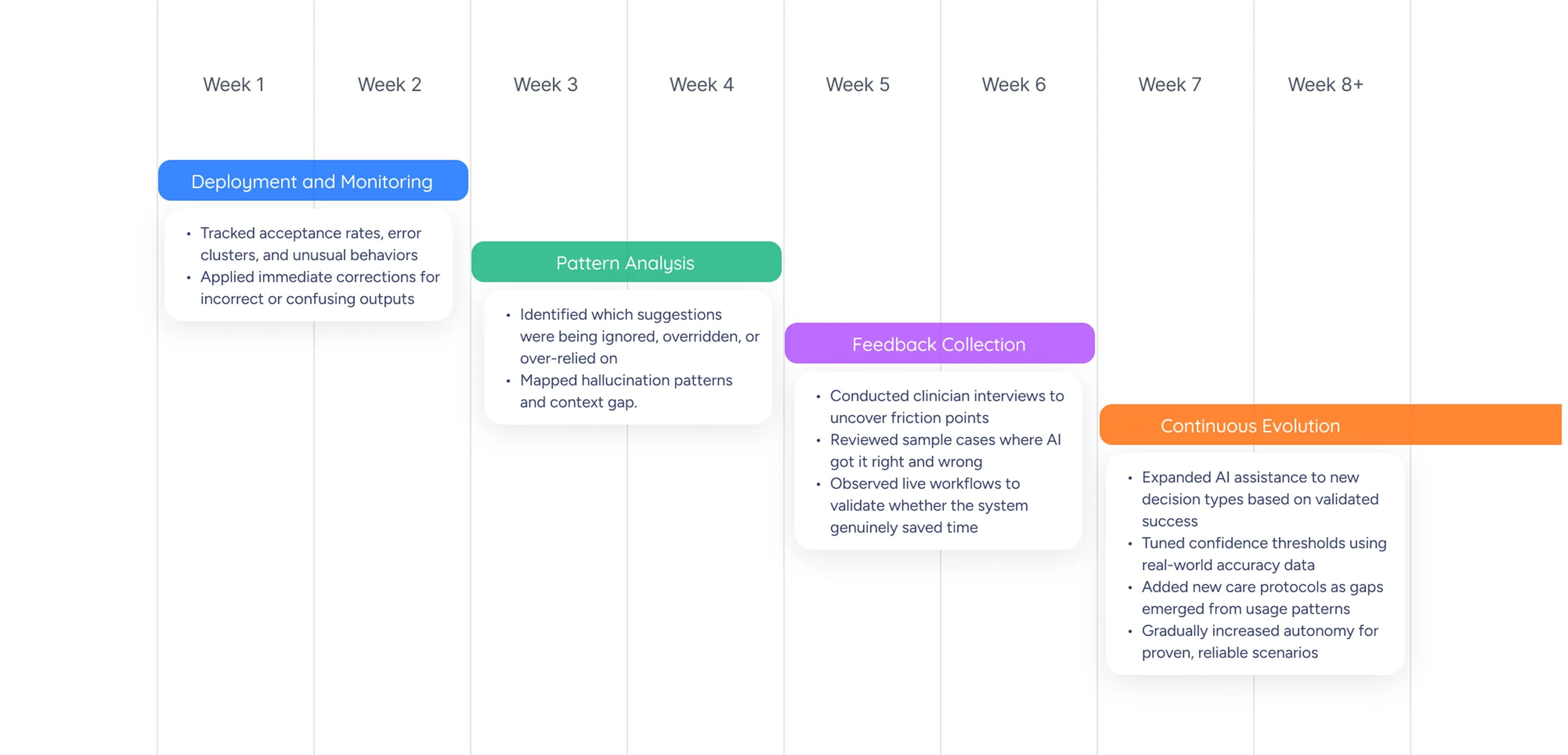

Continuous Refinement Cycle

We treated post-launch learning as part of the design process itself. Over an eight-week refinement cycle, we used structured observation, analytics, and clinician feedback to drive controlled iteration.

Why This Approach Worked

Unlike traditional UX efforts that end at launch, this project required ongoing design governance. The AI's value depended not only on its first version, but on how responsibly it evolved over time.

By building safeguards, feedback loops, and learning thresholds into the design, we ensured the AI could evolve in a controlled, clinician-trusted way, improving its precision without compromising patient safety.

/ IMPACT & TAKEAWAYS

Over time, clinicians began describing the AI as "a colleague who never forgets." They trusted it because it respected their judgment, learned quietly from their feedback, and only spoke up when it mattered.

The system didn't just automate tasks; it reshaped the relationship between people and technology.

It brought clarity to complex workflows, reduced mental load, and made AI feel less like a feature and more like a partnership.

This project became the foundation for how we now approach every AI engagement: through Sense, Shape, and Steer, a framework for building AI that evolves, earns trust, and works as part of the team.
