UX Design for Healthcare AI

Powerful tech will only take you so far. Make AI experiences your moat.

Design AI Your Users Will Love

Most AI initiatives fail not from technical limitations, but from misaligned expectations and poor human-AI interaction design.

You can build sophisticated AI-powered products, but if users can't verify the reasoning, maintain control, or integrate them into their workflows — they won't adopt them.

We help you build products users actually want to use. Smarter, more intuitive experiences that don't just do clever things — they make work feel effortless. That's your competitive moat.

We've shipped 1,500+ projects and touched the lives of more than one million patients, clinicians, and providers. When you partner with Koru, it's like having your own dedicated UX department — one that's navigating the bleeding edge of AI and agentic design with the industry's leading healthcare technology companies.

Six Product Problems AI Helps Solve

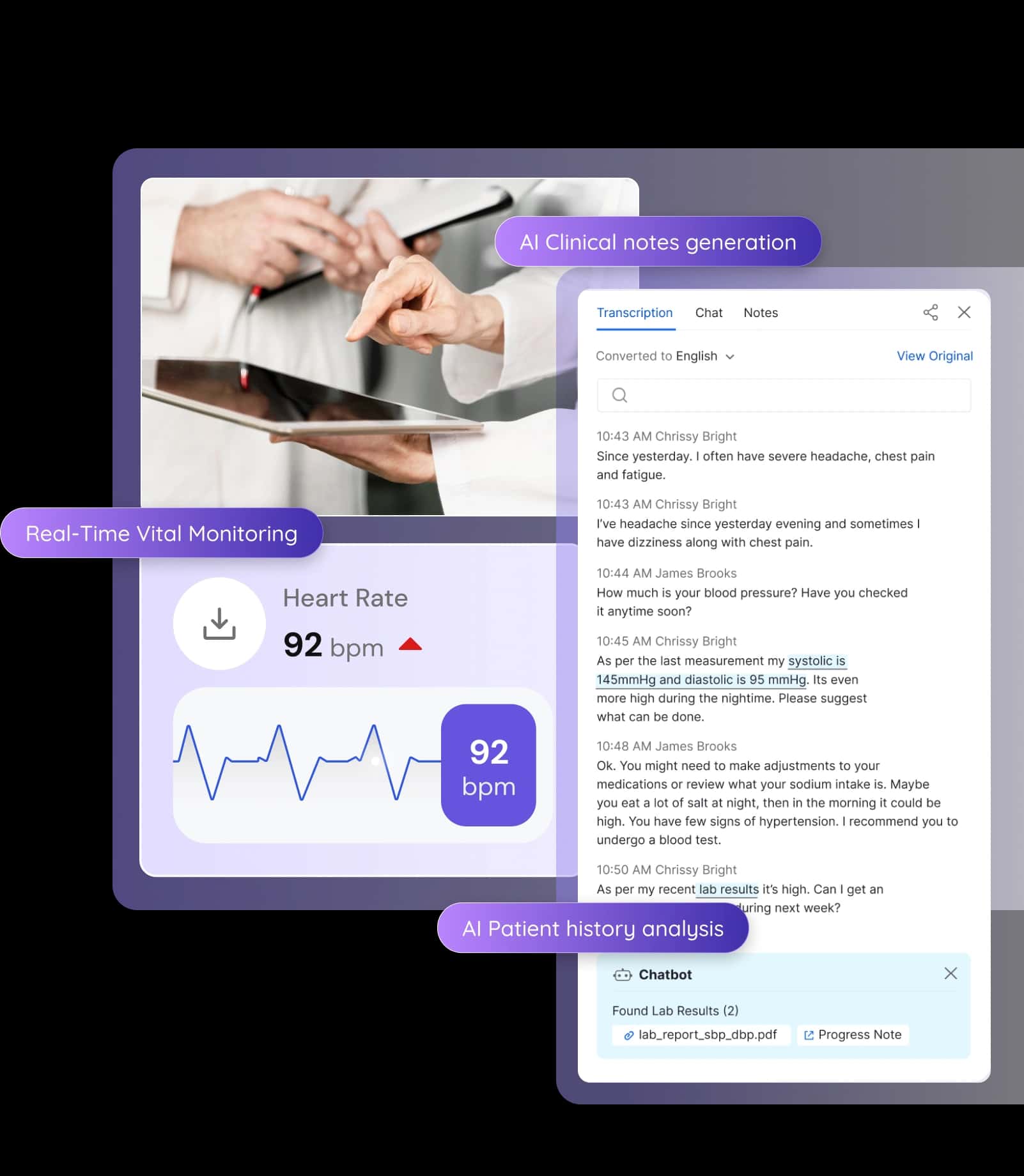

Information Overload

The information your users need exists somewhere in the system.

They just can't find it when they need it - buried in years of records, scattered across platforms, hidden in untagged documents. So they either miss critical details or waste time hunting. What if your users found what matters without hunting for it?

Manual Work

Your users type information that already exists somewhere else. They copy data between systems, repeat the same tasks dozens of times daily.

Each instance feels small, but the accumulated friction exhausts them and pulls focus from work that actually matters. What if your users stopped typing what already exists?

Personalization Gaps

Most products offer one interface for everyone - maybe with configuration options buried in settings.

Beginners drown in complexity they don't understand yet. Experts wade through guidance they've outgrown. Everyone spends time tweaking preferences to make your product work how they need it to. Imagine if your product adapted to each user automatically.

Adoption Barriers

You've had to choose - force everyone through extensive training, or oversimplify and frustrate experienced users.

New users get stuck in the middle, not proficient enough to be effective but discouraged enough to abandon features or invent workarounds. What if your new users accomplished real work from day one?

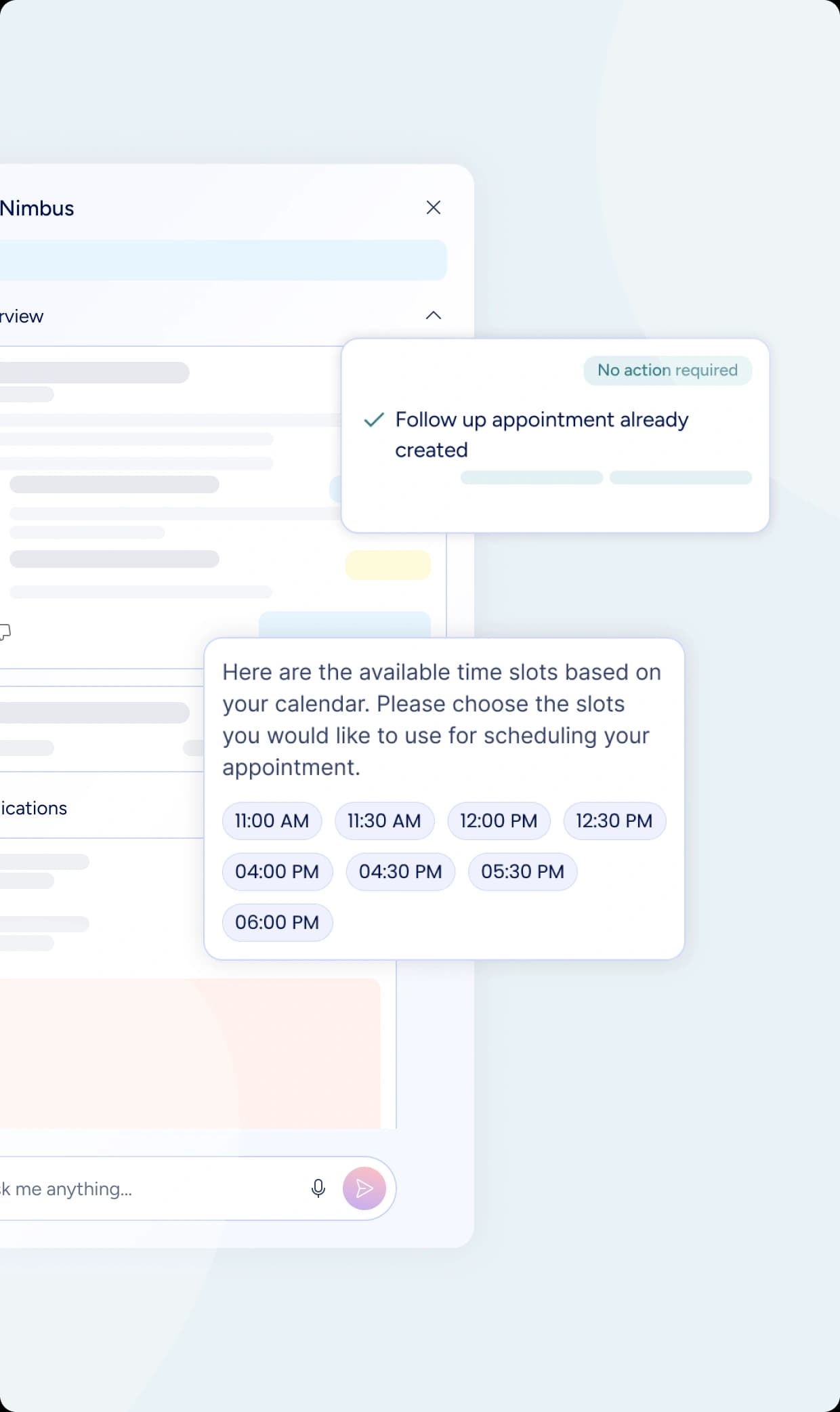

Cross-System Fragmentation

You've built integrations and APIs to connect systems - but users still jump between platforms to complete tasks.

Multiple logins. Information scattered everywhere. Context lost with every switch. Your product becomes one more system in an already fragmented workflow. What if your users stayed in one place while AI worked across systems on their behalf?

Transfer of Expertise

Training programs and documentation only go so far. Your best users' expertise - pattern recognition, workflow optimizations, judgment built over years - stays locked in their heads.

New team members take months or years to develop it, if they ever do. Imagine if your entire user base worked like your best users.

Why Conventional UX Agencies Struggle With AI

(And What Makes Koru Different)

AI UX isn't conventional product design with chatbots added. It requires fundamentally different thinking:

We design behavior, not just interfaces - how intelligence acts, when it intervenes, what autonomy it has.

We design trust between multiple parties - users, AI, organizations, and regulators.

We know you can't "finish" AI UX - systems learn and drift, requiring perpetual governance.

We shape how systems learn - designing the feedback mechanisms through which products become smarter.

We design for unpredictability - varying confidence, unanticipated edge cases, inevitable failures.

We treat explainability as core interaction design - why AI recommended this, what data it used, how users verify it.

We test failures, not just success paths - what happens when AI gets it wrong, how users recover.

We design autonomy on a spectrum - context-dependent, dynamically adjusted.

We deliver different artifacts - behavior specifications with confidence thresholds, trigger conditions, fallback mechanisms.

We do prompt engineering - crafting constraints and guardrails that shape AI responses.
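To make "behavior specifications with confidence thresholds, trigger conditions, fallback mechanisms" concrete, here is a minimal sketch of such a spec written as data instead of prose. The `BehaviorSpec` structure, the `decide` function, and the visit-summary example are hypothetical illustrations, not a Koru artifact format or a real library API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class BehaviorSpec:
    """One AI behavior, written down as data (hypothetical structure)."""
    name: str                        # the action the AI may take
    trigger: Callable[[dict], bool]  # trigger condition: when the behavior may fire
    min_confidence: float            # confidence threshold for acting
    fallback: str                    # fallback mechanism when confidence is too low


def decide(spec: BehaviorSpec, context: dict, confidence: float) -> str:
    """Return what the AI should do for this spec in the given context."""
    if not spec.trigger(context):
        return "stay_silent"  # trigger not met: the AI does not intervene
    if confidence >= spec.min_confidence:
        return spec.name      # triggered and confident enough: perform the behavior
    return spec.fallback      # triggered but unsure: use the fallback


# Hypothetical example: offer a visit summary only while a note is open.
suggest_summary = BehaviorSpec(
    name="suggest_summary",
    trigger=lambda ctx: ctx.get("note_open", False),
    min_confidence=0.8,
    fallback="ask_user",
)

print(decide(suggest_summary, {"note_open": True}, 0.92))   # suggest_summary
print(decide(suggest_summary, {"note_open": True}, 0.55))   # ask_user
print(decide(suggest_summary, {"note_open": False}, 0.99))  # stay_silent
```

Keeping the spec as data means designers and engineers review the same thresholds and fallbacks, and changing a threshold does not require rewriting the logic.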

When It Comes To AI, UX Is The Product

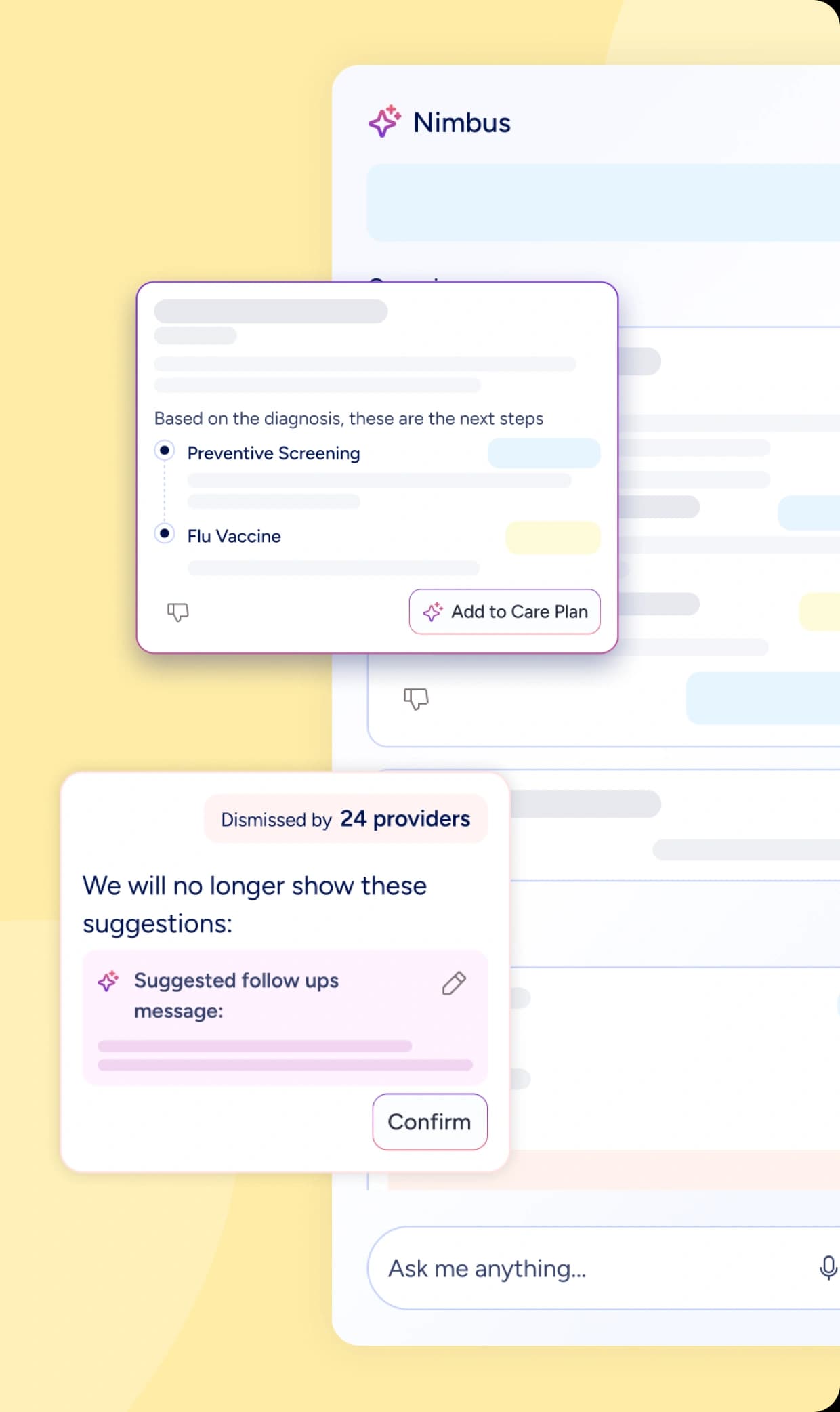

AI Copilots

Your users face complex decisions - but AI that removes their judgment creates accountability problems.

How do you design systems that improve decision quality without taking authority away from the people responsible for outcomes?

We help you define how to present options and trade-offs. Design how to show evidence strength and confidence levels - so users know when AI is certain versus guessing. Map how to handle disagreement between AI recommendations and user judgment.

We design explanations that reveal why AI recommends what it does, preserve user authority over final choices while surfacing information they might miss, and address liability by making clear who decided and why.

Your users make better decisions backed by more complete information - while accountability stays exactly where it belongs.

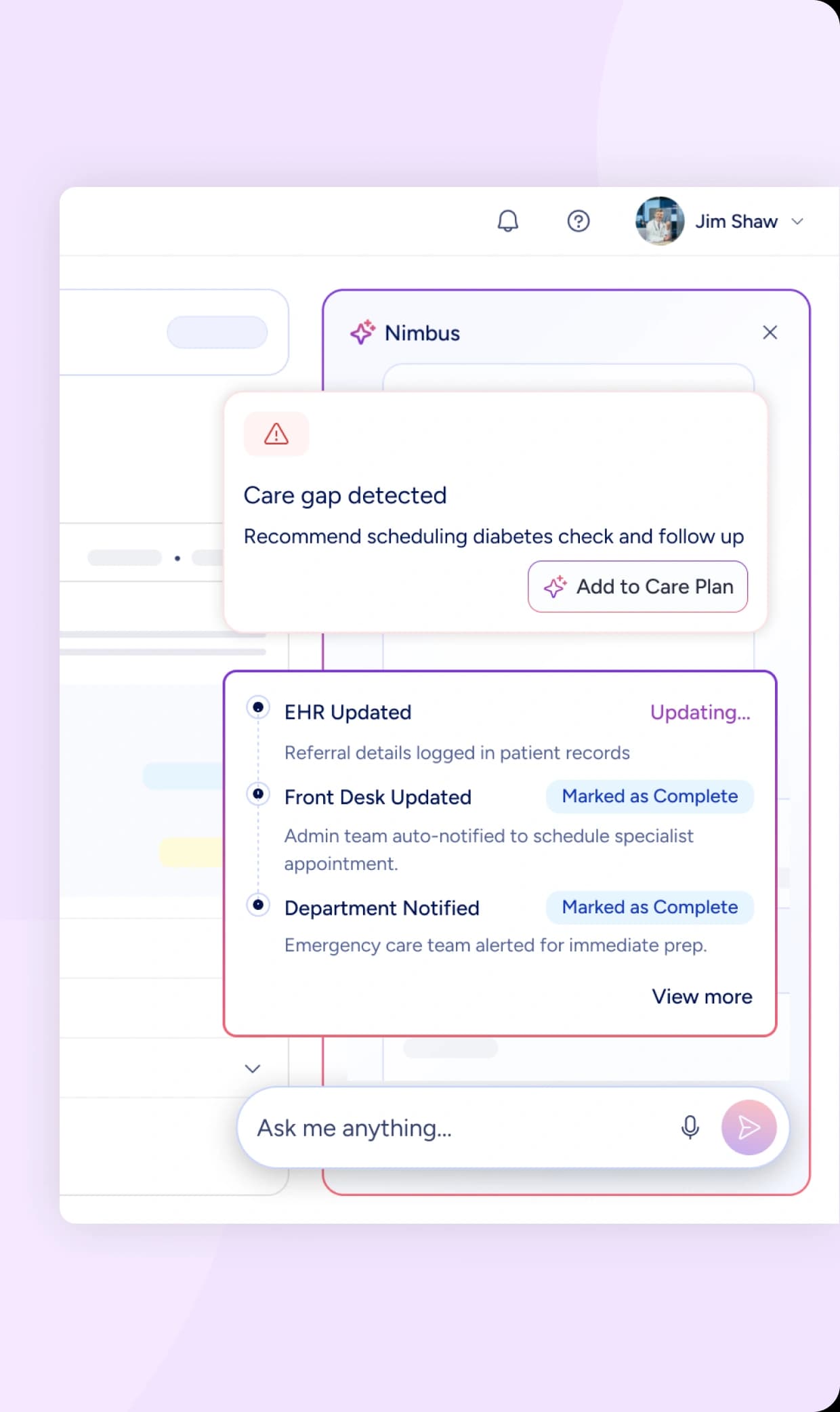

AI Agents

Your agent needs to act independently - but you can't just let it loose. One bad decision cascades into serious problems.

How do you design systems autonomous enough to be useful, controlled enough to be safe?

We help you define specific guardrails - what your agent can touch and what it can't. Map confidence to autonomy - when it acts alone, when it asks permission, when it escalates.
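A minimal sketch of guardrails plus a confidence-to-autonomy mapping, assuming the model exposes a confidence score between 0 and 1. The tool names and the 0.90 / 0.60 cut-offs are hypothetical placeholders; real values would come out of testing with users.

```python
# Hypothetical guardrail: the only tools this agent may call.
ALLOWED_TOOLS = {"read_schedule", "draft_message", "propose_appointment"}

# Hypothetical confidence bands; real values would come from testing.
ACT_ALONE_AT = 0.90
ASK_PERMISSION_AT = 0.60


def route_action(tool: str, confidence: float) -> str:
    """Decide whether the agent acts alone, asks, escalates, or is blocked."""
    if tool not in ALLOWED_TOOLS:
        return "blocked"         # outside the guardrails, regardless of confidence
    if confidence >= ACT_ALONE_AT:
        return "act"             # high confidence: act alone (and log it)
    if confidence >= ASK_PERMISSION_AT:
        return "ask_permission"  # medium confidence: the user approves first
    return "escalate"            # low confidence: hand off to a human


for tool, conf in [("draft_message", 0.95), ("propose_appointment", 0.7),
                   ("read_schedule", 0.3), ("cancel_prescription", 0.99)]:
    print(tool, conf, route_action(tool, conf))
```

The guardrail check runs before the confidence check on purpose: no level of confidence should unlock an action the agent is not allowed to touch.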

For complex workflows, we orchestrate multiple specialist agents - each handling its domain, handing off context cleanly, escalating when it hits limits. We design observability so you see what they're doing in real-time, and rollback mechanisms for when they make mistakes.
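Observability and rollback can be sketched as an action log in which every agent action is recorded together with the step that undoes it. `ActionLog`, the "scheduler" agent, and the calendar example are hypothetical; a production system would persist the log and handle undo steps that themselves fail.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ActionLog:
    """Hypothetical audit trail: each agent action is stored with its undo step."""
    entries: list = field(default_factory=list)

    def record(self, agent: str, description: str, undo: Callable[[], None]) -> None:
        self.entries.append({"agent": agent, "description": description, "undo": undo})

    def rollback(self, agent: str) -> int:
        """Undo every action by one agent, newest first; return how many were undone."""
        undone = 0
        for entry in reversed(self.entries):
            if entry["agent"] == agent:
                entry["undo"]()
                undone += 1
        self.entries = [e for e in self.entries if e["agent"] != agent]
        return undone


calendar = {"slots": []}
log = ActionLog()

calendar["slots"].append("Mon 9:00")
log.record("scheduler", "booked Mon 9:00", lambda: calendar["slots"].remove("Mon 9:00"))
calendar["slots"].append("Tue 14:00")
log.record("scheduler", "booked Tue 14:00", lambda: calendar["slots"].remove("Tue 14:00"))

for e in log.entries:
    print(e["agent"], "->", e["description"])  # observability: what the agent did
print(log.rollback("scheduler"), calendar)      # 2 {'slots': []}
```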

You understand exactly what your agents will do, when they'll escalate, and how you can intervene - which is why you'll trust them.

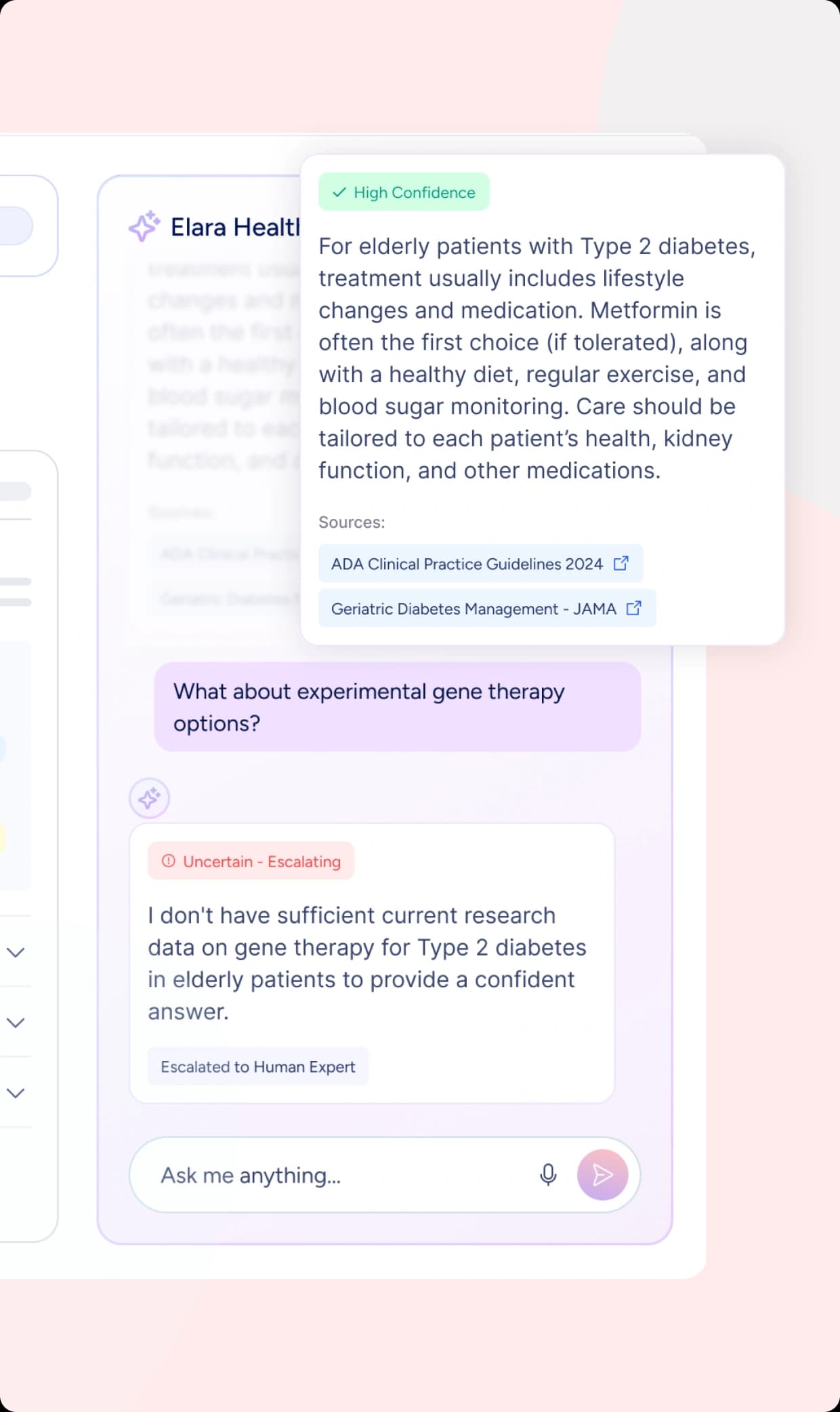

AI Assistants & Chatbots

Your users need instant answers - but conversational AI that can't cite sources or admit uncertainty erodes trust.

How do you design systems that genuinely understand context, not just pattern-match responses?

We help you define conversation boundaries - what your assistant can answer confidently versus when it says "I don't know." Design tone that adapts to context. Map how it handles ambiguity - when to ask clarifying questions versus when assumptions are safe.

We design memory that maintains coherence while forgetting appropriately, citation mechanisms that ground responses in real information, escalation paths when it hits limits, and error recovery when it misunderstands.
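A minimal sketch of citation grounding with an honest "I don't know": the assistant answers only when a retrieved passage clears a relevance cut-off, cites its source, and otherwise offers an escalation path. It assumes an upstream retrieval step that returns scored passages; `Passage`, `MIN_SUPPORT`, and the sample hospital content are hypothetical, and a real assistant would generate an answer from the passage instead of quoting it verbatim.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str   # where the text came from, e.g. a policy document title
    text: str
    score: float  # retrieval relevance, assumed to be in [0, 1]


MIN_SUPPORT = 0.5  # hypothetical cut-off: weaker matches don't count as evidence
ESCALATION = "I don't know. Would you like me to connect you with a staff member?"


def grounded_reply(passages: list[Passage]) -> str:
    """Reply only from well-supported passages, with a citation; otherwise admit it."""
    support = [p for p in passages if p.score >= MIN_SUPPORT]
    if not support:
        return ESCALATION  # no grounding: say so and offer an escalation path
    best = max(support, key=lambda p: p.score)
    return f"{best.text} [Source: {best.source}]"


retrieved = [
    Passage("Visitor policy", "Visiting hours are 8am to 8pm.", 0.82),
    Passage("Parking FAQ", "Parking validation is at the front desk.", 0.31),
]
print(grounded_reply(retrieved))      # answer with a citation
print(grounded_reply(retrieved[1:]))  # weak evidence only: escalation message
```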

Your support burden drops while users get instant access to institutional knowledge - with the credibility they need to act on what they learn.

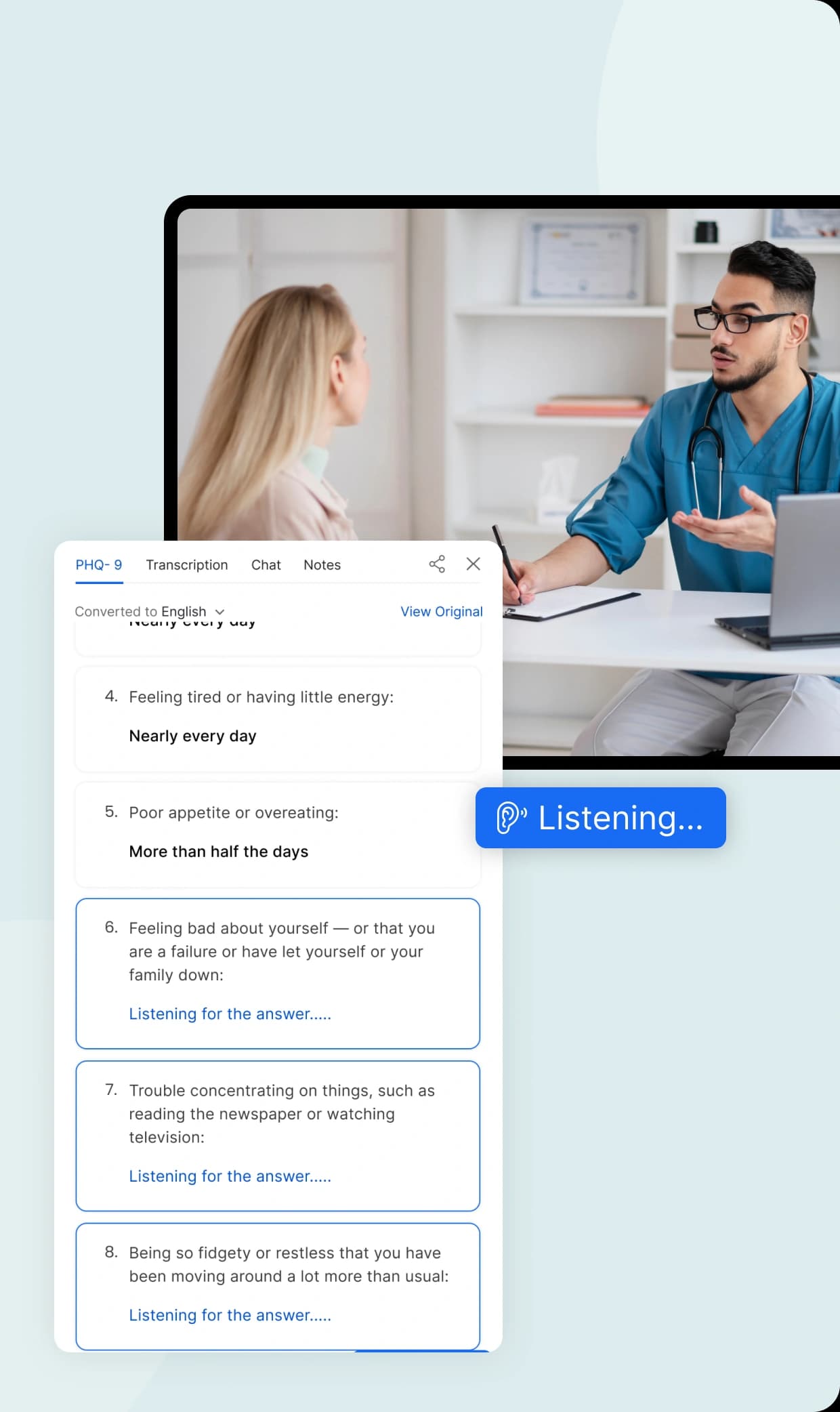

Ambient AI

Your product needs to adapt to each user - but asking them to configure preferences adds friction.

How do you design systems that learn from behavior without feeling invasive or creepy?

We help you define what to personalize and what to leave standard. Map privacy boundaries - what data the system observes and what it ignores. Design when to surface intelligence versus when to keep it invisible.

We design opt-out mechanisms that give users control without requiring configuration, and awareness patterns for when users should know AI is working versus when it should fade completely.
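Privacy boundaries and opt-out can be expressed as an explicit allowlist of observed events plus a single switch the user controls. In this hypothetical sketch, the system only counts which panel a user opens and uses that habit to choose a default view; `OBSERVED_EVENTS`, the panel names, and the three-use threshold are assumptions for illustration.

```python
from collections import Counter

# Hypothetical privacy boundary: the only event types the system observes.
# Free text, keystrokes, and patient content are never recorded here.
OBSERVED_EVENTS = {"open_panel"}


def observe(counts: Counter, opted_out: bool, event: str, target: str) -> Counter:
    """Count an allowed event toward personalization unless the user opted out."""
    if opted_out or event not in OBSERVED_EVENTS:
        return counts  # ignored: outside the boundary, or personalization is off
    updated = Counter(counts)
    updated[target] += 1
    return updated


def preferred_panel(counts: Counter, min_uses: int = 3, default: str = "summary") -> str:
    """Open the user's most-used panel by default once the habit is clear."""
    if not counts:
        return default
    panel, uses = counts.most_common(1)[0]
    return panel if uses >= min_uses else default


counts = Counter()
for _ in range(3):
    counts = observe(counts, False, "open_panel", "labs")
counts = observe(counts, False, "typed_note", "free text")  # not observed
print(preferred_panel(counts))  # labs
```

Until the habit is clear, users see the standard default, so personalization never asks them to configure anything.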

Your product adapts automatically to how each user works - no configuration burden, no privacy concerns, just experiences that feel personally tailored.

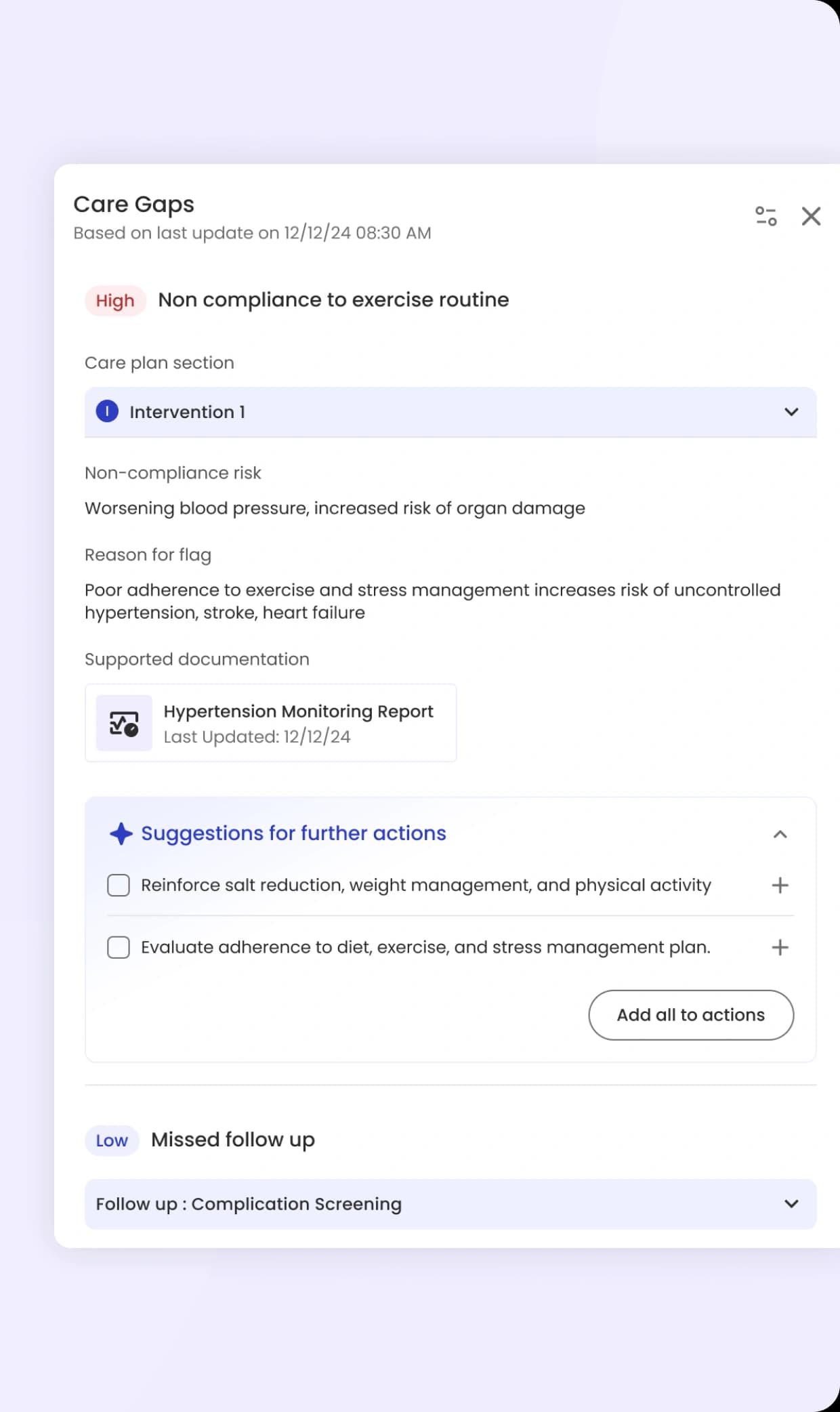

Predictive/Proactive AI

Your system can spot patterns before they become problems - but too many alerts and users ignore them all.

How do you design systems that enable early intervention without creating alert fatigue?

We help you define alert thresholds - when to notify versus when to stay quiet. Map false positive tolerance - what's acceptable in different contexts. Design how to explain predictions and show evidence.

We define time horizons - whether to predict an hour or a week ahead - and calibrate sensitivity to user context, because a busy shift needs different thresholds than a routine day. Map what to do with predictions - suggest action, automate, or just inform.
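One way to turn "false positive tolerance" into an alert threshold: using scores from past cases that turned out fine, pick the lowest threshold whose false-positive rate stays within the tolerance for a given context. This is a minimal sketch under stated assumptions; the tolerance values and the evenly spaced stand-in scores are hypothetical, not clinical data.

```python
def pick_threshold(negative_scores: list[float], max_fp_rate: float) -> float:
    """Lowest alert threshold whose false-positive rate stays within tolerance.

    negative_scores: model scores for past cases that turned out fine, so any
    alert on them would have been a false positive. An alert fires when
    score >= threshold. Candidates are limited to observed scores for simplicity.
    """
    n = len(negative_scores)
    for t in sorted(set(negative_scores)):
        false_positives = sum(s >= t for s in negative_scores)
        if false_positives / n <= max_fp_rate:
            return t
    return float("inf")  # no observed score is strict enough: never alert


# Hypothetical tolerances: a busy shift tolerates fewer false alarms.
FP_TOLERANCE = {"busy_shift": 0.05, "routine_day": 0.15}

past_negatives = [i / 20 for i in range(20)]  # stand-in scores, not real data
for context, tolerance in FP_TOLERANCE.items():
    print(context, pick_threshold(past_negatives, tolerance))
# busy_shift 0.95
# routine_day 0.85
```

A lower tolerance pushes the threshold up, so the busy shift sees fewer, higher-confidence alerts; the trade-off is that some real issues will score below the stricter threshold.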

Your users intervene early on issues that actually matter, ignoring the noise because you've eliminated it.

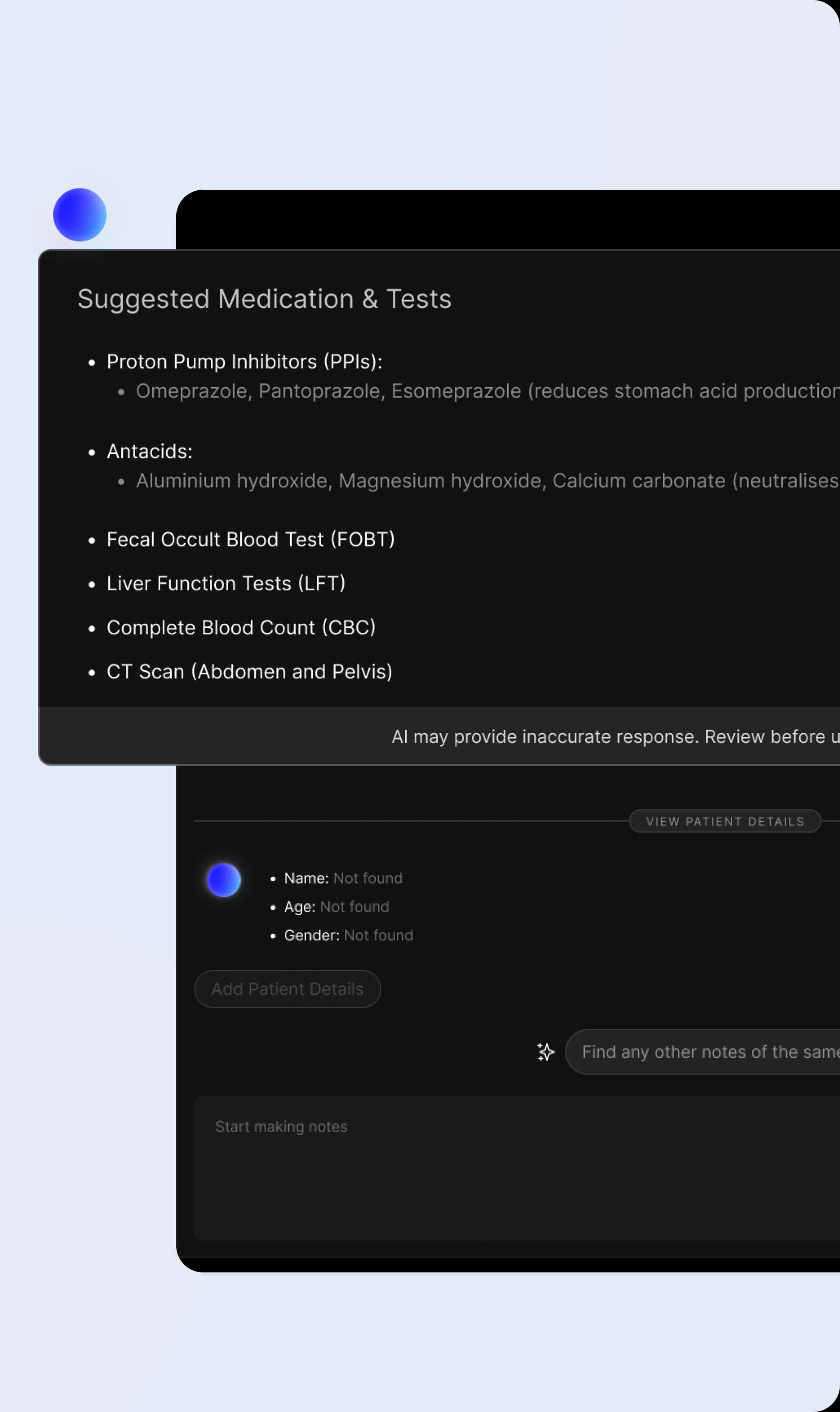

Generative AI

Your users need AI to create content - but systems that hallucinate facts or ignore ethical boundaries become liability risks.

How do you design systems that generate helpful content without creating compliance nightmares?

We help you define content boundaries - what's off-limits, what requires human review. Design verification workflows for checking accuracy before content goes live, and citation mechanisms that show where information came from.

We design editing workflows that make refinement easy without forcing users to start over - because they won't adopt all-or-nothing tools. Add guardrails for sensitive content, brand voice consistency, and audit trails for accountability.
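Content boundaries, review workflows, and audit trails can be sketched as a routing rule plus an append-only log. The topic labels, `route_draft`, and `log_decision` are hypothetical; the sketch assumes some upstream step has already tagged each draft with topics and noted whether it carries citations.

```python
from datetime import datetime, timezone

# Hypothetical content boundaries for generated drafts.
OFF_LIMITS = {"legal_advice"}              # never generated
REVIEW_REQUIRED = {"dosage", "diagnosis"}  # always checked by a human


def route_draft(topics: set[str], has_citations: bool) -> str:
    """Decide what happens to a generated draft before it goes live."""
    if topics & OFF_LIMITS:
        return "reject"
    if topics & REVIEW_REQUIRED or not has_citations:
        return "human_review"  # sensitive or unsourced: a person verifies it
    return "publish"


audit_trail: list[dict] = []


def log_decision(draft_id: str, decision: str, actor: str) -> None:
    """Append who decided what, and when, so every published draft is traceable."""
    audit_trail.append({
        "draft": draft_id,
        "decision": decision,
        "by": actor,
        "at": datetime.now(timezone.utc).isoformat(),
    })


log_decision("d-101", route_draft({"diet"}, has_citations=True), "system")
log_decision("d-102", route_draft({"dosage"}, has_citations=True), "system")
print([(e["draft"], e["decision"]) for e in audit_trail])
# [('d-101', 'publish'), ('d-102', 'human_review')]
```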

Your users eliminate repetitive writing with quality output - while you maintain the controls needed to manage risk and compliance.


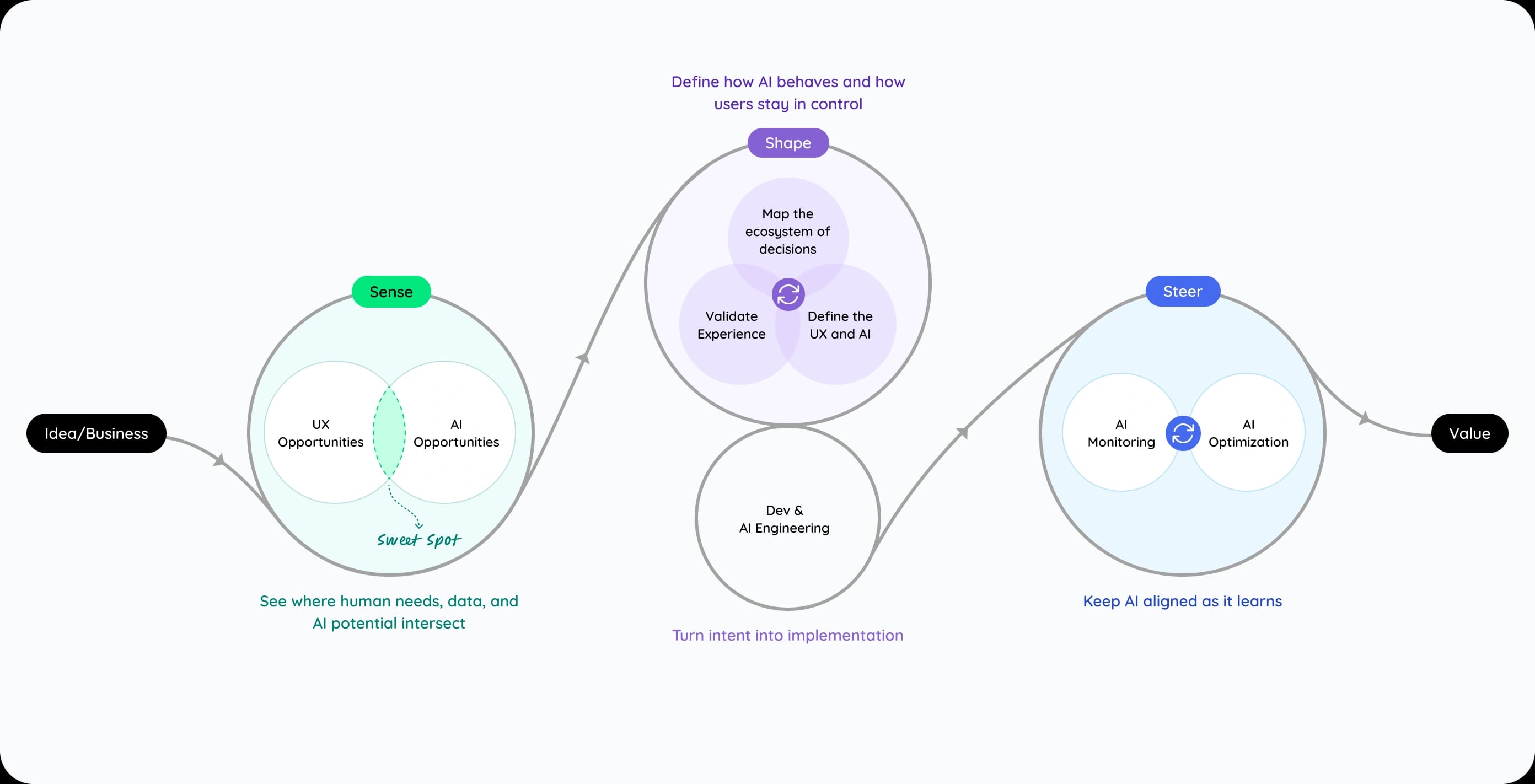

The Sense-Shape-Steer Framework

A closed-loop approach to creating AI products that keep improving after launch.

From sensing opportunities to shaping behavior and steering real-world performance, every cycle brings the system — and the experience — closer to its intent.

The three phases are Sense (understanding where AI can enhance the UX), Shape (designing how the AI should behave), and Steer (governing and evolving the experience). Each phase informs the others, and the cycle repeats as your product evolves.

Get The “Sense-Shape-Steer” UX for AI Training (Workbook & Video Walkthrough)

SENSE

Before we design, we listen

(to users, data and context)

When you come to us with an AI application — whether it's an agent, copilot, chatbot, or decision support system — you typically know (or strongly suspect) AI is the right approach. Our job is to help you discover how to do it right.

This is highly collaborative. Your team knows the domain, business constraints and data realities. We bring structured research methods and pattern recognition from designing AI across healthcare products. “Sense” is about laying out all the pieces (constraints, capabilities, autonomy levels) so that when we move to Shape, we know what we’re working with.

Discover the UX Opportunity

Understanding the human problem and workflows

Before we jump into models or data, we start with people.

We dig into how work actually happens today — where effort piles up, what slows users down, and what success really looks like from their side.

We map Jobs-To-Be-Done to surface the tasks your users need to accomplish:

Identify friction patterns: information overload, manual work, personalization gaps, adoption barriers, cross-system fragmentation, expertise that doesn't scale

You're in the room as we synthesize findings on Miro boards in real time

Your product team sees patterns emerging

In a working session, we score opportunities across:

Business impact: What moves the needle on efficiency, cost, or revenue?

User trust: Where does AI intervention feel natural vs. intrusive?

Technical feasibility: What can be built realistically with your data and current architecture?

This shared scoring helps teams see where AI will have the most meaningful impact — and ensures everyone’s aligned on why a particular direction is worth pursuing.
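The shared scoring can be as simple as a weighted sum across the three criteria. This sketch assumes a 1 to 5 scale and hypothetical weights that a team would agree on in the working session; the candidate opportunities are invented examples.

```python
# Hypothetical weights; in a workshop the team agrees on these together.
WEIGHTS = {"business_impact": 0.4, "user_trust": 0.35, "feasibility": 0.25}


def opportunity_score(scores: dict[str, int]) -> float:
    """Weighted score across the three criteria, each rated 1-5."""
    return round(sum(WEIGHTS[k] * scores[k] for k in WEIGHTS), 2)


opportunities = {
    "Auto-draft prior-auth letters": {"business_impact": 5, "user_trust": 4, "feasibility": 4},
    "Predict no-shows": {"business_impact": 4, "user_trust": 3, "feasibility": 5},
    "Autonomous triage": {"business_impact": 5, "user_trust": 2, "feasibility": 2},
}

ranked = sorted(opportunities, key=lambda name: opportunity_score(opportunities[name]), reverse=True)
for name in ranked:
    print(f"{opportunity_score(opportunities[name]):.2f}  {name}")
```

A weighted sum lets a high business score offset low trust, so some teams also set a minimum user-trust score that an opportunity must clear before it is ranked at all.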

Discover the AI Opportunity

Understanding what's technically possible and what constrains it

Understanding what’s technically possible is just as critical as understanding user needs. Here, we explore the boundaries and potential of your AI — what it can do today, what might still be science fiction, and what trade-offs come with each path.

We look under the hood to understand the AI itself — how it learns, where it struggles, and what it needs from your data to deliver consistent value.

Model capabilities:

What can the existing AI model(s) do vs. what's still science fiction?

Architecture options: Thin LLM wrapper? Fine-tuned model? RAG-based? Custom training?

Data infrastructure: What ground truth exists? Is it accessible? Where are data gaps and bias issues?

Technical and cost integration points:

Which existing systems will the AI have access to?

Are the computational costs scalable?

Risk and expectations:

What level of accuracy is acceptable? What happens when the AI is wrong?

What is the projected CAIR (Confidence in AI Results)?

This determines confidence levels, what you can promise users, and what constraints shape the experience.
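The CAIR question above refers to Confidence in AI Results. One publicly discussed formulation (from the LangChain blog) frames it as a ratio; treat it as a design heuristic rather than a measured quantity:

```latex
\mathrm{CAIR} = \frac{\text{Value}}{\text{Risk} \times \text{Correction}}
```

Here Value is the benefit users get when the AI is right, Risk is the consequence of it being wrong, and Correction is the effort needed to fix a mistake. Read this way, making errors cheaper to spot and undo raises user confidence even when model accuracy stays the same.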

By the end of Sense, we’ve connected the dots between what users need and what AI can reliably deliver. We now know where AI fits best, what risks it carries, and what kind of experience it should enable.

Next, we bring it all together — designing how the AI should behave, interact, and earn trust in Shape.

SHAPE

Once we understand, we design

(to define how AI behaves and how users interact with it)

This is where ideas take form.

We define how the AI should act, respond, and communicate — when it should take initiative, when it should pause, and how it should earn the user’s trust.

We explore the balance between autonomy and oversight, shaping tone, visibility, and control so the AI feels powerful yet predictable.

Here, design moves fast — we prototype AI-driven interactions that mimic real model behavior, then refine them through iterative, AI-specific testing.

Each round helps us understand not just what works, but why users trust it (or don’t).

Map the Ecosystem of Decisions

Understanding how AI fits into real workflows — and where humans must continue to lead

We start by mapping how work actually happens: who decides what, when, and with which information.

This reveals where AI can assist, automate, or amplify human judgment — and where it shouldn’t interfere.

Trace decision flows

Who needs what information, when, to make which choices?

Which moments are repetitive, data-heavy, or delay-prone (ideal for AI)?

Which require context, empathy, or expertise (best left human-led)?

Define autonomy boundaries

Low-risk: High AI autonomy with light human oversight

Medium-risk: AI suggests, human approves

High-risk: Low AI autonomy, high human control
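The three risk tiers map naturally onto a small lookup table. In this hypothetical sketch, unrated tasks fall back to the most conservative mode, so a new task type never gets high autonomy by accident; the task examples and mode descriptions are illustrative assumptions.

```python
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "AI acts; humans spot-check a sample"
    SUGGEST = "AI suggests; a human approves"
    HUMAN_LED = "Human decides; AI only informs"


# Hypothetical mapping from the risk tiers above to autonomy modes.
AUTONOMY_BY_RISK = {"low": Mode.AUTONOMOUS, "medium": Mode.SUGGEST, "high": Mode.HUMAN_LED}


def autonomy_for(task_risk: str) -> Mode:
    # Unclassified tasks default to the most conservative mode.
    return AUTONOMY_BY_RISK.get(task_risk, Mode.HUMAN_LED)


tasks = {
    "reformat a referral letter": "low",
    "suggest a billing code": "medium",
    "change a medication dose": "high",
    "new, unreviewed task type": "unrated",
}
for task, risk in tasks.items():
    print(f"{task}: {autonomy_for(risk).value}")
```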

Surface data trust gaps

Where data quality, latency, or coverage undermines confidence

Map AI into workflows

We visualize where AI adds clarity or speed — when it listens, recommends, summarizes, or acts — and how these moments connect to user intent.

Design interaction logic

Through prompt and context engineering, we define how AI understands intent, applies knowledge, and communicates tone and confidence.

We outline when it acts autonomously, when it pauses for input, and how it explains uncertainty.

Design feedback loops

Define how the AI will learn from users — what signals it collects (edits, confirmations, rejections), how those signals feed the model, and where human review is required.
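A minimal sketch of turning edits, confirmations, and rejections into structured signals. It assumes the UI emits `(suggestion_id, signal)` events; the 0.5 review threshold is hypothetical, and edited suggestions are only queued as training candidates for human review, not fed straight into a model.

```python
from collections import Counter

# Hypothetical feedback signals captured from the UI.
SIGNALS = {"confirmed", "edited", "rejected"}


def summarize_feedback(events: list[tuple[str, str]], review_below: float = 0.5) -> dict:
    """Count known signals and flag the behavior for review if acceptance is low."""
    counts = Counter(signal for _, signal in events if signal in SIGNALS)
    total = sum(counts.values())
    acceptance = counts["confirmed"] / total if total else 0.0
    return {"counts": dict(counts), "acceptance": acceptance,
            "needs_human_review": acceptance < review_below}


def training_candidates(events: list[tuple[str, str]]) -> list[str]:
    """Edited suggestions are candidate examples, pending human review before any retraining."""
    return [sid for sid, signal in events if signal == "edited"]


events = [("s1", "confirmed"), ("s2", "edited"), ("s3", "rejected"),
          ("s4", "confirmed"), ("s5", "unknown_signal")]
print(summarize_feedback(events))
print(training_candidates(events))
```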

These behavior blueprints guide early AI prototypes, where we simulate model behavior to explore how humans and AI share decisions in context.

Validate Experience

Testing usefulness and trust before engineering builds the real thing

Traditional mock-ups can’t capture how AI behaves in motion — how it reasons, hesitates, or learns from users.

We mimic AI behavior through simulated workflows that approximate real model reasoning and responses.

Why static mock-ups fail for AI

Can’t show how AI handles unexpected inputs

Can’t reveal if users understand its reasoning

Can’t test tolerance for mistakes

Can’t demonstrate confidence variation

What we do instead

AI workflow prototypes: Mimic AI reasoning, tone, and confidence — even before the model is live

Wizard-of-Oz testing: Simulate AI to study real reactions without full engineering effort

Failure mode testing: Expose low-confidence or incorrect responses to understand recovery expectations

Trust testing: Test if users grasp why AI suggested something and feel confident acting on it

Autonomy testing: Find the right balance between AI initiative and human oversight
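For failure mode testing before a model exists, a scripted stand-in can return canned answers and, on a set share of turns, a deliberately planted low-confidence wrong answer. This is a hypothetical sketch, not a real API; in classic Wizard-of-Oz testing a researcher types the replies live, while this scripted version trades realism for repeatability.

```python
import random
from dataclasses import dataclass


@dataclass
class SimulatedReply:
    text: str
    confidence: float
    is_failure: bool  # True when the reply is a planted mistake


class ScriptedAI:
    """Hypothetical stand-in for a model during prototype testing."""

    def __init__(self, script: dict[str, str], failure_rate: float, seed: int = 0):
        self.script = script
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded so every session sees the same failures

    def reply(self, prompt: str) -> SimulatedReply:
        if prompt not in self.script:
            return SimulatedReply("I'm not sure. Can you rephrase?", 0.2, False)
        if self.rng.random() < self.failure_rate:
            # Planted error: shown with low confidence to study how users recover.
            return SimulatedReply("Wednesday at 3pm with Dr. Patel.", 0.45, True)
        return SimulatedReply(self.script[prompt], 0.9, False)


script = {"When is my next appointment?": "Tuesday at 10am with Dr. Lee."}
ai = ScriptedAI(script, failure_rate=0.3, seed=7)
for _ in range(5):
    r = ai.reply("When is my next appointment?")
    print(f"{r.confidence:.2f} {'PLANTED FAILURE' if r.is_failure else 'ok'}: {r.text}")
```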

Feedback loops

We test how users respond to AI suggestions — what they correct, reject, or confirm — and whether these signals can drive meaningful learning.

Each iteration helps us refine prompts, context, and weighting so that user feedback becomes structured, useful input for the system to improve itself.

By the end of Shape, the AI’s role, tone, and reasoning model are clearly defined and validated — forming the behavioral blueprint for a trustworthy, human-centered AI experience.

Design doesn’t end when AI goes live — that’s when it truly begins to learn.

In Steer, we stay alongside it, watching how real users shape its behavior, how confidence grows, and where trust gets tested.

This is where we make sure the intelligence we designed continues to feel human.

STEER

As intelligence grows, we steer

(to help it stay useful, transparent, and trusted)

AI keeps learning — and so must design.

In Steer, we stay close to how AI behaves in the real world — not to control it, but to guide it.

We observe how it performs, where it hesitates, and how people respond.

Each insight helps the experience grow wiser, fairer, and more reliable as the AI matures.

Steer isn’t about maintenance — it’s about governance through awareness.

We establish the guardrails that keep human accountability visible: how the AI decides, when it escalates, and who’s in control when confidence drops.

It’s how we keep AI trustworthy, not just functional.

Embedded Support

Bringing design intent to life — and keeping it alive.

When ideas move from design to build, intent can get diluted.

We stay embedded with engineering teams to make sure what we designed — clarity, empathy, and confidence — stays intact as AI becomes operational.

Join stand-ups, reviews, and pairing sessions to keep user experience tied to implementation decisions

Collaborate on prompt and context refinement to ensure AI responses align with design intent

Run early feedback cycles with real or simulated users to catch drift between designed and actual behavior

Define shared success metrics — confidence, accuracy, explainability, trust — so everyone measures the same outcomes

We don’t just hand over specs — we help shape how design and AI evolve together.

Measure & Iterate

Improving accuracy, reliability, and trust through continuous feedback

As AI meets real-world use, our focus shifts from designing the behavior to guiding it.

We observe how it performs, learns, and adapts—then tune the experience to stay accurate, dependable, and human-aligned.

That’s the essence of Steer: learning in motion.

Observe real interactions to see where AI helps, hinders, or confuses

Refine thresholds, tone, and messaging as confidence grows or falters

Track how new data affects accuracy and model behavior — because as data changes, so does the experience

Watch how user feedback influences the AI’s learning — what it picks up automatically and what still needs human review

Keep learning safe and intentional — adjust how often the AI learns from feedback and how much human oversight is built in

Evolve communication to explain reasoning and uncertainty more clearly

Monitor for bias, drift, and adoption patterns to ensure fairness and dependability

Designers, engineers, and data scientists stay in rhythm — tuning prompts, flows, and metrics together as patterns emerge
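One common way to watch for drift is the Population Stability Index (PSI), which compares the distribution of recent model scores with a baseline. This sketch assumes scores in [0, 1] and equal-width bins; the synthetic data is illustrative only. Commonly quoted rules of thumb treat PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be set per product.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between baseline and recent scores in [0, 1]."""
    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int(v * bins), 0), bins - 1)  # clamp into a valid bin
            counts[idx] += 1
        # Small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]      # stand-in launch-time scores
recent = [0.9 + i / 1000 for i in range(100)]  # stand-in scores bunched near the top
print(round(psi(baseline, baseline), 3))  # 0.0
print(psi(baseline, recent) > 0.25)       # True
```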

Steer keeps the human touch in an ever-learning system. What we learn here scales into patterns that guide future decisions, across users and products alike.

Every insight from Steer feeds the next Sense — helping us listen better, design smarter, and steer with more confidence the next time around.

Ready to Build AI Users Actually Want to Use?

Whether you're designing a new AI-powered product from scratch or integrating AI into an existing platform, success comes from aligning expectations with technology and designing human-AI interaction right.

Schedule a discovery session with our team of award-winning UX strategists. We'll discuss where you are, where you want to go, and whether we're the right partner to get you there.

What to expect

- Get clarity on your AI UX challenges, from early prototypes to scaling adoption
- Consult with designers who've shipped AI experiences for major healthcare providers
- Discover how mature products are adding AI without disrupting workflows
- Determine if we're a good fit to collaborate
- Understand our engagement model, timelines, and investment options

FAQs

Please contact us if you have a question about our process that is not answered below.

How do we know if we're ready to build an AI product?

You're ready when you have a clear user problem that AI solves better than traditional approaches - and when you have (or can access) the data to train models. We help you assess both during discovery. The key isn't perfect data or fully-baked models; it's a genuine user need that AI addresses.